Legal Q&A

Answer people's questions about the problems they face, giving brief information that helps them understand their legal issues, weigh options, connect to resources, and plan next steps.

Task Description

When people face a stressful legal problem—like eviction, debt collection, family separation, or losing benefits—they often don’t know where to start. They have questions, confusion, and fear, but no clear pathway to help. Whether they arrive at a legal help website, talk to a junior staff member, or approach a pro bono clinic, the first challenge is understanding whether their problem is legal, what rights they have, and what steps to take.

This task involves a system that functions as a conversational legal triage and orientation assistant. The person describes their problem in their own words—typed, spoken, or uploaded—and the system engages them in a guided back-and-forth. It asks clarifying questions, listens for key facts (like deadlines, geography, or issue type), and tailors its responses accordingly. At the end of the interaction, it provides a clear summary of the issue, explains rights and risks, and offers links to trusted legal help, tools, or organizations.
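
The guided back-and-forth described above can be pictured as a loop over a small set of key facts. The Python sketch below is a minimal illustration, not the design of any existing tool: the fact names (`deadline`, `issue_type`, `geography`), the question wording, and the rule of asking about deadlines first are all assumptions. It asks about the first missing fact and moves to a summary once every fact is known.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical clarifying questions, one per key fact the assistant listens for.
CLARIFYING_QUESTIONS = {
    "deadline": "Do you have a court date, hearing, or other deadline? If so, when is it?",
    "issue_type": "What kind of problem is this (for example, eviction, debt, or benefits)?",
    "geography": "What state or county do you live in?",
}

# Assumed priority order: deadlines first, because a missed date can cause the most harm.
FACT_ORDER = ["deadline", "issue_type", "geography"]


@dataclass
class IntakeState:
    """Key facts gathered so far in one conversation (None means not yet known)."""
    deadline: Optional[str] = None
    issue_type: Optional[str] = None
    geography: Optional[str] = None

    def missing_facts(self) -> List[str]:
        return [name for name in FACT_ORDER if getattr(self, name) is None]


def next_turn(state: IntakeState) -> str:
    """Ask about the first missing fact, or summarize once all facts are known."""
    missing = state.missing_facts()
    if missing:
        return CLARIFYING_QUESTIONS[missing[0]]
    return (
        f"Summary so far: {state.issue_type} issue in {state.geography}; "
        f"deadline: {state.deadline}. Next: your rights, risks, and where to get help."
    )


# Usage: the user has only said they face eviction.
state = IntakeState(issue_type="eviction")
print(next_turn(state))  # asks about the deadline first
state.deadline = "hearing next Tuesday"
state.geography = "Cook County, Illinois"
print(next_turn(state))  # prints the summary line
```

A real assistant would extract these facts from free text with a model; the fixed question order simply keeps the most time-sensitive fact at the front.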

This AI assistant doesn’t give legal advice or make legal decisions. It focuses on orientation, triage, and empowerment—bridging the gap between legal complexity and human need. For staff, it can also act as a co-pilot—suggesting answers or follow-ups to junior staff, volunteers, or navigators working with clients live.

This tool is valuable for legal help websites, courts, pro bono projects, and community-based service networks. It reduces the burden on overwhelmed help lines or intake teams, while offering 24/7 guidance and navigation to people in need.

Success means the person understands their legal situation, feels more confident, and takes strategic next steps—whether that’s filling out a form, contacting a legal aid group, or preparing for a hearing.

Primary audiences

Legal help website users, legal navigators, pro bono volunteers, junior staff.

Quantitative Summary of Stakeholder Feedback

Legal help teams rated the Q&A task a very high priority.

- Average Value Score: 4.53 (High)

- Collaboration Interest: 3.42 (Medium)

This was rated one of the most valuable ideas, though there was slightly less consensus on whether to pursue it in a federated or national way.

Existing Projects

A number of chatbot pilots and production tools already exist:

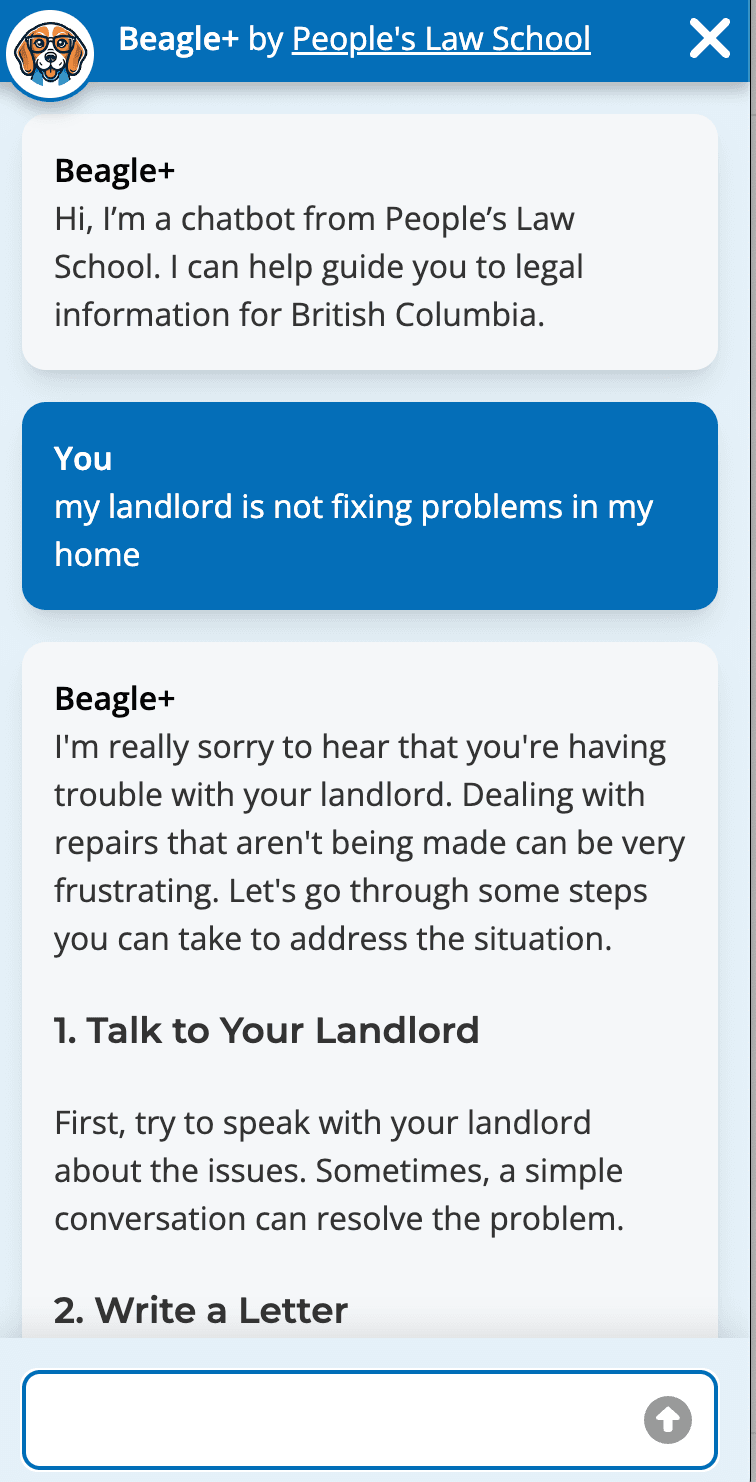

- Beagle+ from People's Law School in British Columbia

- Ask ILAO (Illinois Legal Aid Online): A conversational legal help assistant.

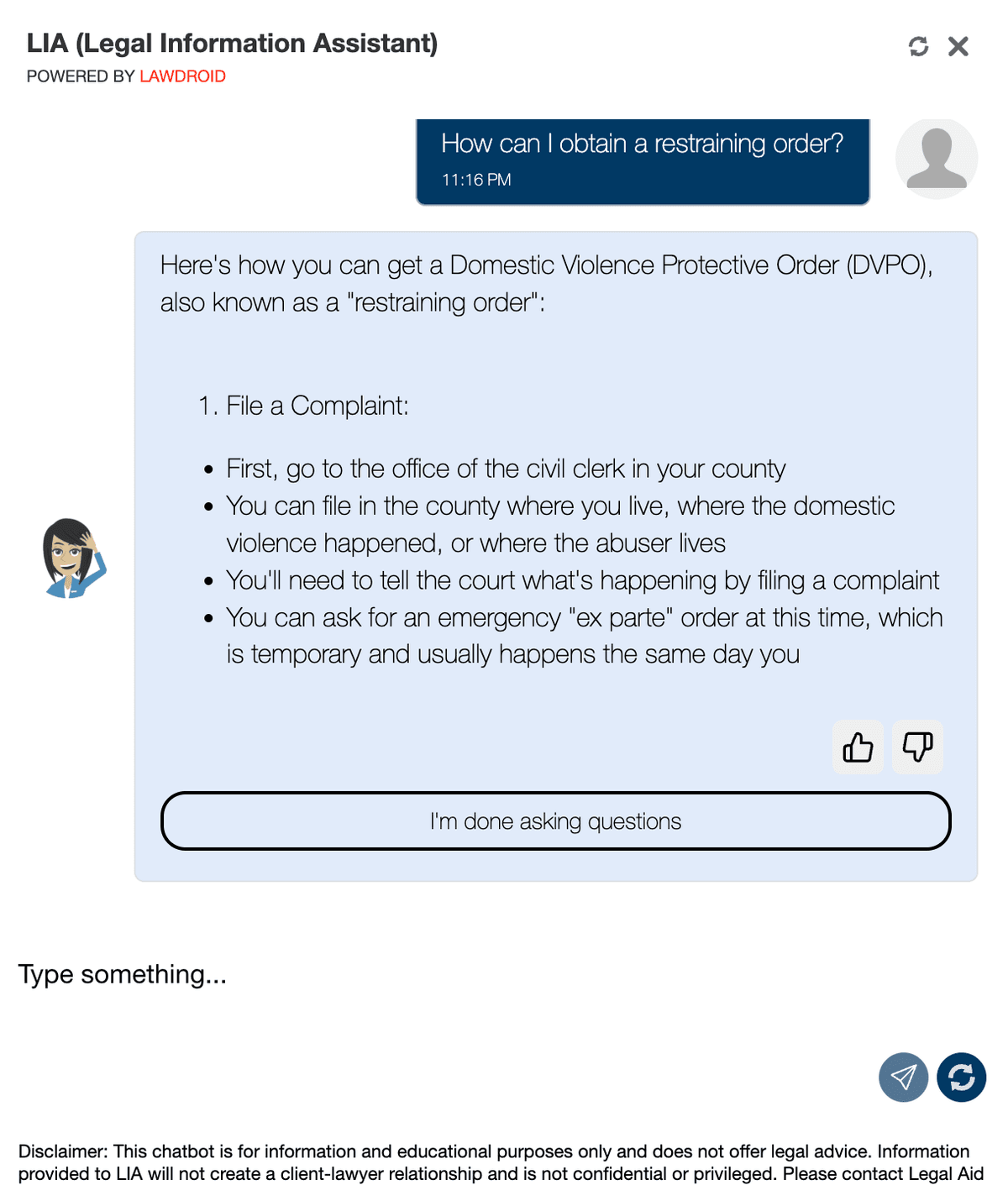

- LIA (North Carolina): A guided help bot.

- Texas Legal Services Center’s “Amigo” bot: Designed to walk users through legal needs.

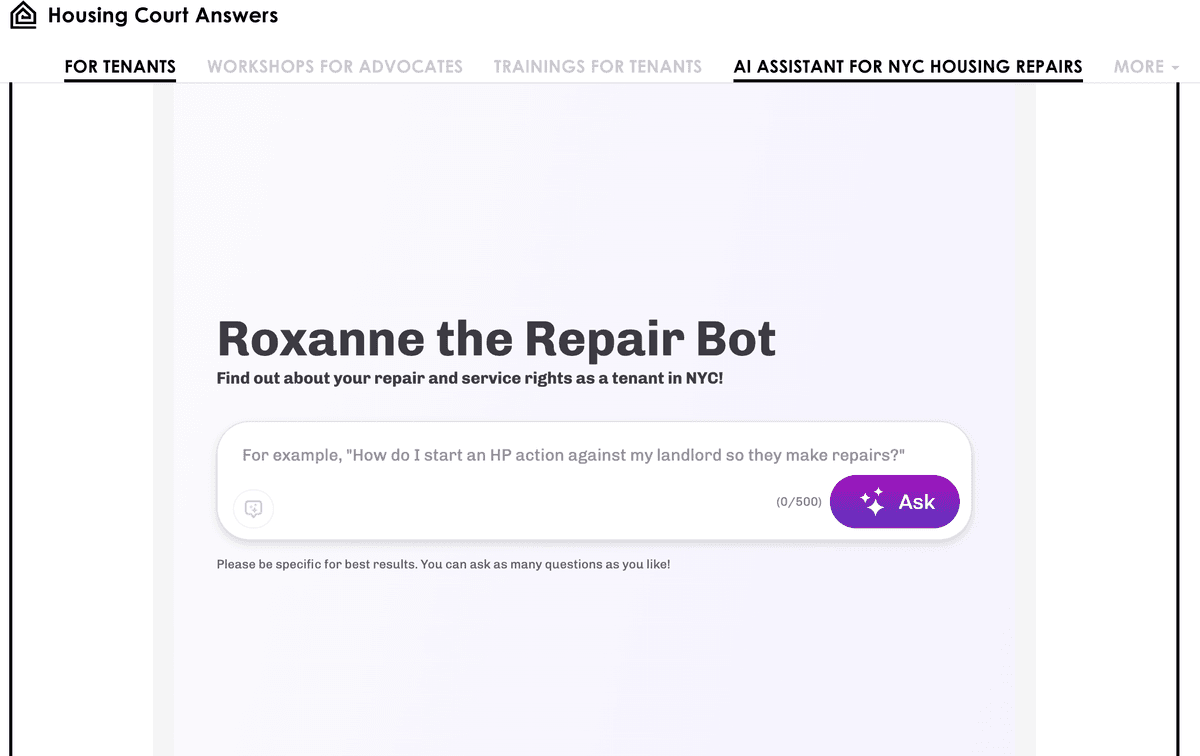

- Roxanne the Repair Bot in New York

- A TIG-funded bot in New Mexico.

- NCSC-supported projects in Alaska and beyond.

- References to Quinten Steenhuis's tools, likely Docassemble-powered bots.

Technical Resources & Protocols

Open-source libraries for building on top of LLMs, such as LangGraph and Deep Chat

Vector databases for RAG, such as Milvus

Drupal modules such as AI Search API

Vendors such as LawDroid and Josef

Data & Tech Collaboration Ideas

Shared Content & Training Sets: could we use existing self-help resources, RAG bots, and training data to build new ones?

Can we use Guided Interviews to teach the AI how to structure information into digestible bits?

Retrieval-Augmented Generation (RAG): Must pull from structured guide content, not just scraped web text

Legal help pages need structured metadata.
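
One way to read the RAG and metadata requirements together is to store guide content as sections tagged with metadata, filter on that metadata before ranking, and only then pass the top sections to the model. The sketch below assumes a hypothetical `GuideSection` schema with `jurisdiction` and `issue_type` fields and placeholder example.org URLs. It uses simple word overlap as a stand-in for the embedding similarity a vector database such as Milvus would compute, and it is not drawn from any of the tools listed here.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass(frozen=True)
class GuideSection:
    """One chunk of structured guide content plus metadata (hypothetical schema)."""
    title: str
    text: str
    jurisdiction: str  # e.g. "IL"
    issue_type: str    # e.g. "eviction"
    url: str


def tokenize(s: str) -> Set[str]:
    """Lowercase word set; a toy replacement for an embedding."""
    return set(re.findall(r"[a-z0-9']+", s.lower()))


def retrieve(question: str, sections: List[GuideSection], jurisdiction: str,
             issue_type: Optional[str] = None, k: int = 3) -> List[GuideSection]:
    """Filter by metadata, then rank the remaining sections by word overlap."""
    candidates = [
        s for s in sections
        if s.jurisdiction == jurisdiction
        and (issue_type is None or s.issue_type == issue_type)
    ]
    q = tokenize(question)
    scored = [(len(q & tokenize(s.title + " " + s.text)), s) for s in candidates]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated sections
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored[:k]]


SECTIONS = [
    GuideSection("Responding to an eviction notice",
                 "What to do after your landlord gives you an eviction notice.",
                 "IL", "eviction", "https://example.org/il/eviction-notice"),
    GuideSection("Eviction notices in British Columbia",
                 "How eviction notice rules work for BC tenants.",
                 "BC", "eviction", "https://example.org/bc/eviction-notice"),
    GuideSection("Debt collection lawsuits",
                 "What to do if a debt collector sues you.",
                 "IL", "debt", "https://example.org/il/debt-lawsuit"),
]

hits = retrieve("My landlord gave me an eviction notice. What do I do?", SECTIONS, "IL")
for hit in hits:
    print(hit.title, hit.url)  # the IL eviction page ranks first; the BC page is filtered out
```

Filtering before ranking is the point of the metadata: the BC page shares the most words with the question, yet it can never be shown to an Illinois user.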

Fine-tuning requires chat logs plus gold-standard answer sets.
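
Pairing chat logs with gold-standard answers usually means turning each logged conversation into a training example whose final reply is the expert-approved answer rather than whatever the bot originally said. The sketch below assumes a chat-style JSON Lines layout (one JSON object per line, each holding a `messages` list of `role`/`content` pairs), a common shape for chat fine-tuning data; the exact format depends on the model provider. The system prompt and the function names are hypothetical.

```python
import json
from typing import Dict, List

# Hypothetical system prompt; a real project would write and review its own.
SYSTEM_PROMPT = ("You give legal information and orientation, not legal advice. "
                 "Use plain language and point people to trusted help.")


def to_training_record(chat_log: List[Dict[str, str]], gold_answer: str) -> dict:
    """Pair a logged conversation with the gold-standard reply to its last user turn.

    chat_log is a list of {"role": "user" or "assistant", "content": ...} turns
    that ends with the user message being answered.
    """
    if not chat_log or chat_log[-1]["role"] != "user":
        raise ValueError("chat log must end with the user turn being answered")
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    messages += [{"role": turn["role"], "content": turn["content"]} for turn in chat_log]
    messages.append({"role": "assistant", "content": gold_answer})
    return {"messages": messages}


def to_jsonl(records: List[dict]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)


log = [
    {"role": "user", "content": "I got a notice from my landlord."},
    {"role": "assistant", "content": "What state are you in?"},
    {"role": "user", "content": "Oregon."},
]
record = to_training_record(log, "GOLD-STANDARD ANSWER WRITTEN BY A REVIEWER")
print(to_jsonl([record]))
```

Keeping the earlier turns in each record lets the model learn from the clarifying questions as well as from the final answer.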

Plain-language response scaffolds
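
A response scaffold can be as simple as a fixed set of labeled sections that every answer fills in, mirroring the summary, rights, risks, and links the assistant is meant to deliver at the end of a conversation. The sketch below is hypothetical: the section labels and the 20-word sentence limit in `long_sentences` are assumptions, not a published plain-language standard.

```python
import re
from dataclasses import dataclass, field
from typing import List


@dataclass
class ScaffoldedAnswer:
    """Fixed sections every answer fills in (hypothetical scaffold)."""
    summary: str
    rights: List[str] = field(default_factory=list)
    risks: List[str] = field(default_factory=list)
    next_steps: List[str] = field(default_factory=list)
    resources: List[str] = field(default_factory=list)  # names or links of trusted help


def render(answer: ScaffoldedAnswer) -> str:
    """Render short, labeled sections; sections with no items are skipped."""
    parts = [f"What's going on: {answer.summary}"]
    for label, items in [
        ("Your rights", answer.rights),
        ("Risks to watch for", answer.risks),
        ("Next steps", answer.next_steps),
        ("Where to get help", answer.resources),
    ]:
        if items:
            parts.append(label + ":\n" + "\n".join(f"- {item}" for item in items))
    return "\n\n".join(parts)


def long_sentences(text: str, max_words: int = 20) -> List[str]:
    """Flag sentences longer than max_words (an assumed plain-language threshold)."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if len(s.split()) > max_words]


answer = ScaffoldedAnswer(
    summary="Your landlord has started an eviction case.",
    next_steps=["Read the court papers and note the hearing date."],
    resources=["Your local legal aid office"],
)
text = render(answer)
print(text)
print(long_sentences(text))  # [] because every sentence here is short
```

The same scaffold could be given to a model as an output format, with `long_sentences` run afterward as a cheap check before an answer is shown.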

Stakeholder Commentary on this task

Responses varied in confidence:

- Wary of overpromising: One legal aid leader warned that chatbots can appear helpful while giving dangerously wrong advice.

- Need for caution in federated scaling: Several noted that local laws, content quality, and infrastructure vary greatly.

- Appetite for internal copilot use: Interest was stronger in using AI to support human navigators than in offering it directly to the public.

“I am a bit wary of a public-facing tool that is doing legal triage. If it’s not correct, it can cause harm.”

“From what I’ve seen, most of the more reliable bots are built with much more limited scopes.”

How to Measure Quality?

The Legal Design Lab has developed a Quality Rubric for systems' performance at Legal Q&A tasks. This was developed using extensive interviews and scenario exercises with users and frontline experts. Read more about the Rubric creation process here.

Protocols to Use in Evaluation

Aside from these metrics, what are ways to collect data on performance? Some stakeholders have suggested the following:

- Accuracy measurement: Have human or LLM judges regularly review responses and track the accuracy of their legal and procedural details.

- Participation, engagement, and follow-through rates: “Compare use against baseline. For instance, our existing bot increased FAQ engagement by 30%.” Do users actually keep chatting with the system? Do they then take the next step the system recommended?

- Regular auditing: Experts review real or synthetic test cases and assess the system against the metrics. The review can go beyond accuracy to cover the other factors as well. As one expert said, “We would need to carefully track conversations and make sure there is human review and testing. Metrics alone won’t tell you what’s going wrong.”

- Follow-up surveys with users to see how they would rate, improve, or change the system so that it better serves their needs and produces better outcomes. Users should be able to give feedback on their experience easily: a simple thumbs up/down button, a one-question Net Promoter Score (NPS) survey, or something more extensive.

- User quizzes to assess comprehension and satisfaction, either in a simulated or live system. Does this system help the person accurately understand their scenario, see the next steps, and make a strategic choice?
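
Several of these protocols reduce to simple rates over logged conversations. The sketch below computes them from a hypothetical log schema (`Conversation` and its fields are assumptions). It counts a conversation as engaged if it reaches an assumed minimum of three user turns, measures follow-through only where a next step was recommended and the outcome is known, and uses the standard Net Promoter Score formula: the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (0 to 6). `relative_lift` expresses a change against a baseline, the way the 30% engagement figure in the stakeholder quote is phrased.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Conversation:
    """One logged conversation (hypothetical schema)."""
    user_turns: int
    recommended_step: bool             # did the system recommend a next step?
    took_step: Optional[bool]          # follow-up result; None if unknown
    judged_accurate: Optional[bool]    # human or LLM judge verdict; None if not reviewed
    thumbs_up: Optional[bool]          # thumbs up/down; None if no feedback given
    nps_score: Optional[int]           # 0-10 survey answer; None if skipped


def rate(flags: List[bool]) -> Optional[float]:
    """Share of True values, or None when there is nothing to measure."""
    return sum(flags) / len(flags) if flags else None


def net_promoter_score(scores: List[int]) -> Optional[float]:
    """Percent promoters (9-10) minus percent detractors (0-6), from -100 to 100."""
    if not scores:
        return None
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def relative_lift(current: float, baseline: float) -> float:
    """Relative change against a baseline, e.g. 0.3 means a 30% increase."""
    return (current - baseline) / baseline


def summarize(convs: List[Conversation], min_turns: int = 3) -> Dict[str, Optional[float]]:
    """Aggregate the per-conversation signals into the rates discussed above."""
    return {
        "engagement_rate": rate([c.user_turns >= min_turns for c in convs]),
        "follow_through_rate": rate([c.took_step for c in convs
                                     if c.recommended_step and c.took_step is not None]),
        "accuracy_rate": rate([c.judged_accurate for c in convs
                               if c.judged_accurate is not None]),
        "thumbs_up_rate": rate([c.thumbs_up for c in convs if c.thumbs_up is not None]),
        "nps": net_promoter_score([c.nps_score for c in convs if c.nps_score is not None]),
    }


logs = [
    Conversation(5, True, True, True, True, 10),
    Conversation(1, True, False, False, False, 3),
    Conversation(4, False, None, None, None, 9),
    Conversation(3, True, None, True, True, None),
]
print(summarize(logs))
```

Unknown outcomes are excluded rather than counted as failures, so each rate describes only the conversations that actually produced that signal. As the auditing item notes, these numbers complement human review rather than replace it.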

Related Projects

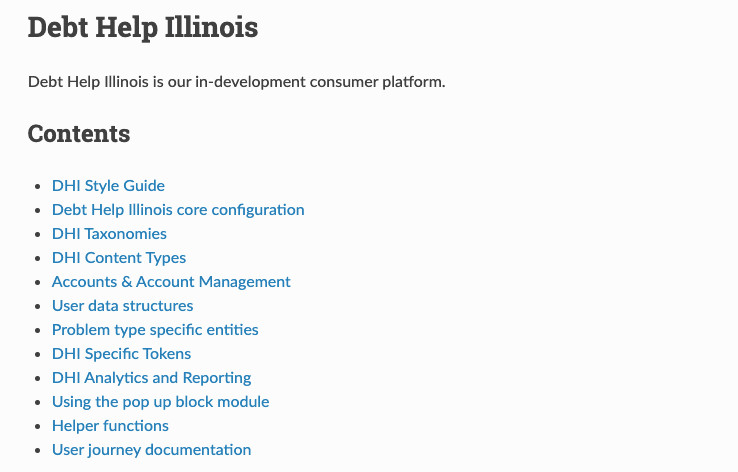

Debt Help IL

An AI-supported resource that provides Illinois consumers and community helpers with tailored legal and financial information to address debt proactively and reduce downstream harms.

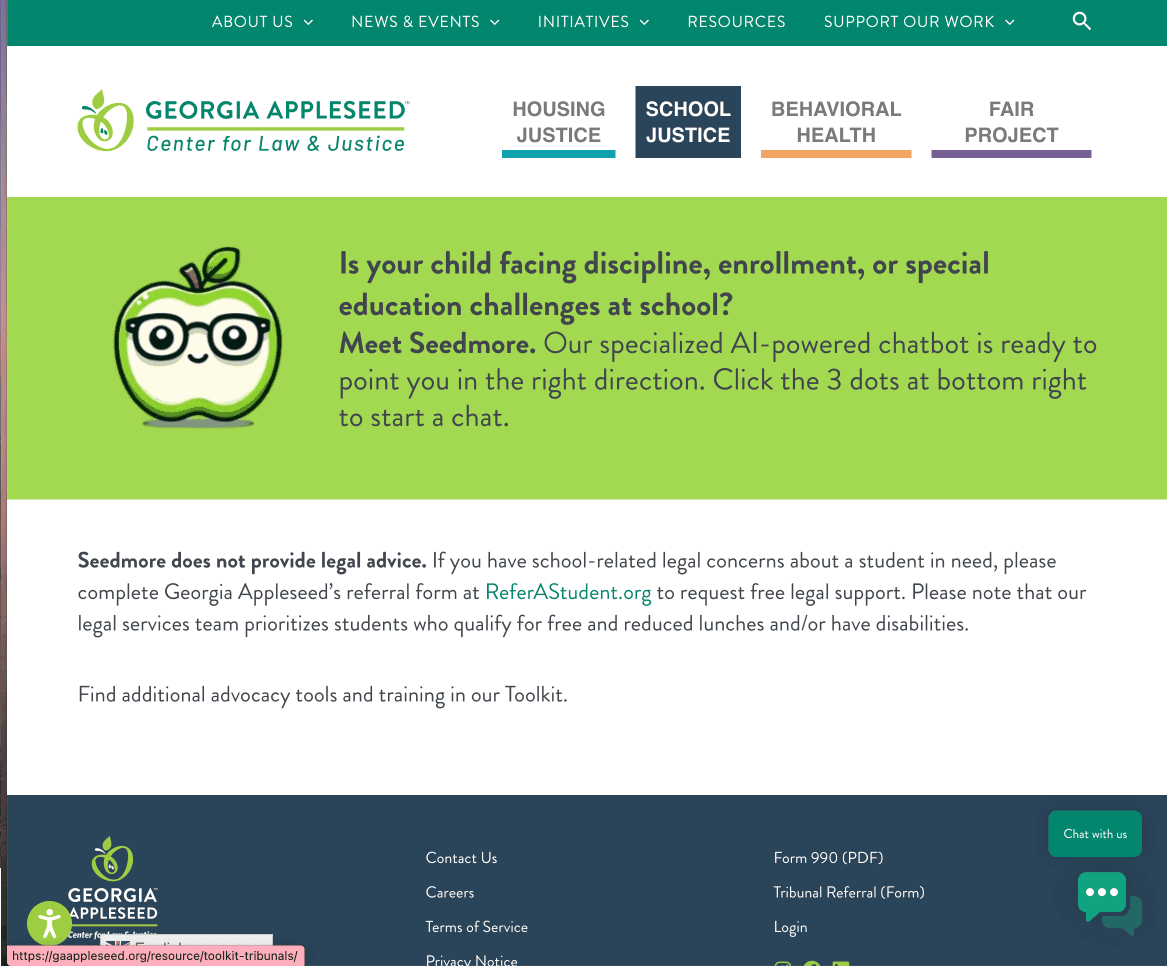

Seedmore chatbot

An AI-supported navigator that helps parents in Georgia understand school discipline, enrollment, and special education processes, using curated legal and advocacy materials.

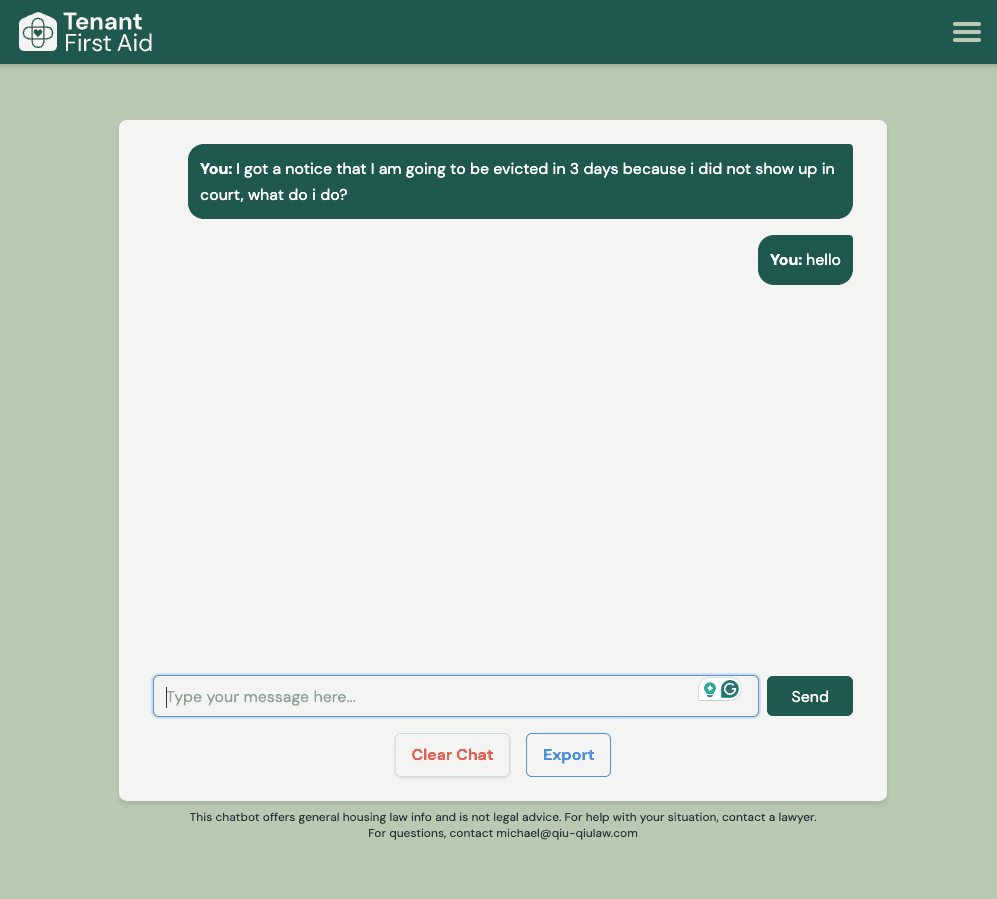

Tenant First Aid

An AI-powered chatbot for Oregon renters facing eviction or landlord disputes. Developed by Code PDX and Qiu Qiu Law, it helps tenants understand their rights and take action.

Maria

A free, privacy-preserving AI chatbot from Honduras’ State Secretariat for Security (with UNDP/Infosegura and USAID support) that helps women, girls, and adolescents identify gender-based violence, understand options, and connect to appropriate services, including Linea 114.

Sophia Chat

A confidential, 24/7 AI chatbot from Swiss NGO Spring ACT that provides expert-reviewed information on domestic violence, safety planning, legal options, and connections to local services—available anonymously worldwide in 25+ languages.

LeaseChat

LeaseChat is a free tool that analyzes residential leases, answers questions with references to the document and local law, and drafts landlord communications.

JusticeBot

An AI-powered chatbot from the University of Montreal that helps the public in Quebec understand landlord-tenant rights and responsibilities, using trusted legal information.

Violetta

A Spanish-language, supervised-ML WhatsApp chatbot that helps users name and understand relationship violence, get tailored guidance, and connect anonymously to human support (including the “Purple Line”) and legal resources in Mexico.

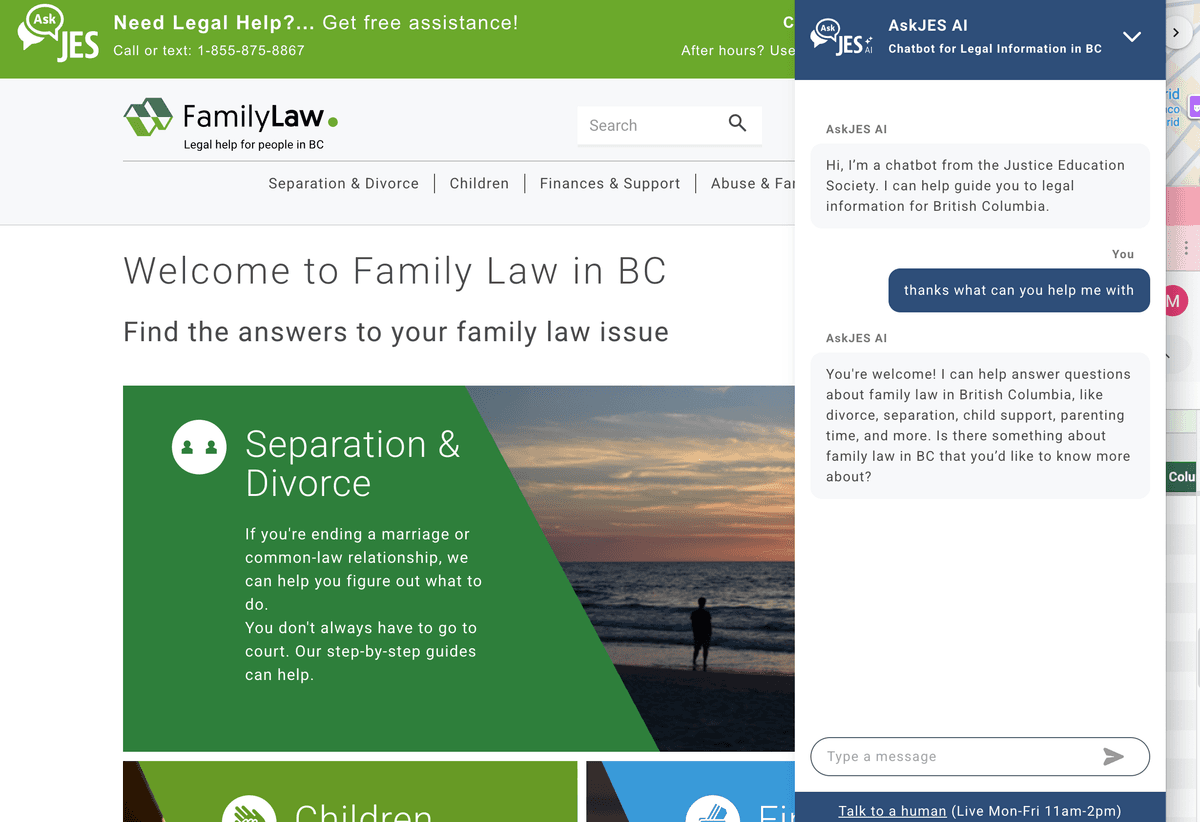

Beagle+

Beagle+ is an AI-powered legal information assistant developed for British Columbia that uses retrieval-augmented generation (RAG) to deliver accurate, jurisdiction-specific legal guidance.

Reclamo AI

A multilingual, mobile-first AI assistant that helps workers in New York understand their wage rights, spot wage theft, and connect to legal aid.

Nevada Family Court chatbot

An AI-supported, multilingual assistant on Nevada’s court self-help site that provides information and guided steps for common family-court processes, grounded in court-approved materials.

LIA

An AI-powered virtual legal assistant from LANC that helps North Carolinians navigate common civil legal problems with plain-language, bilingual guidance and referrals.

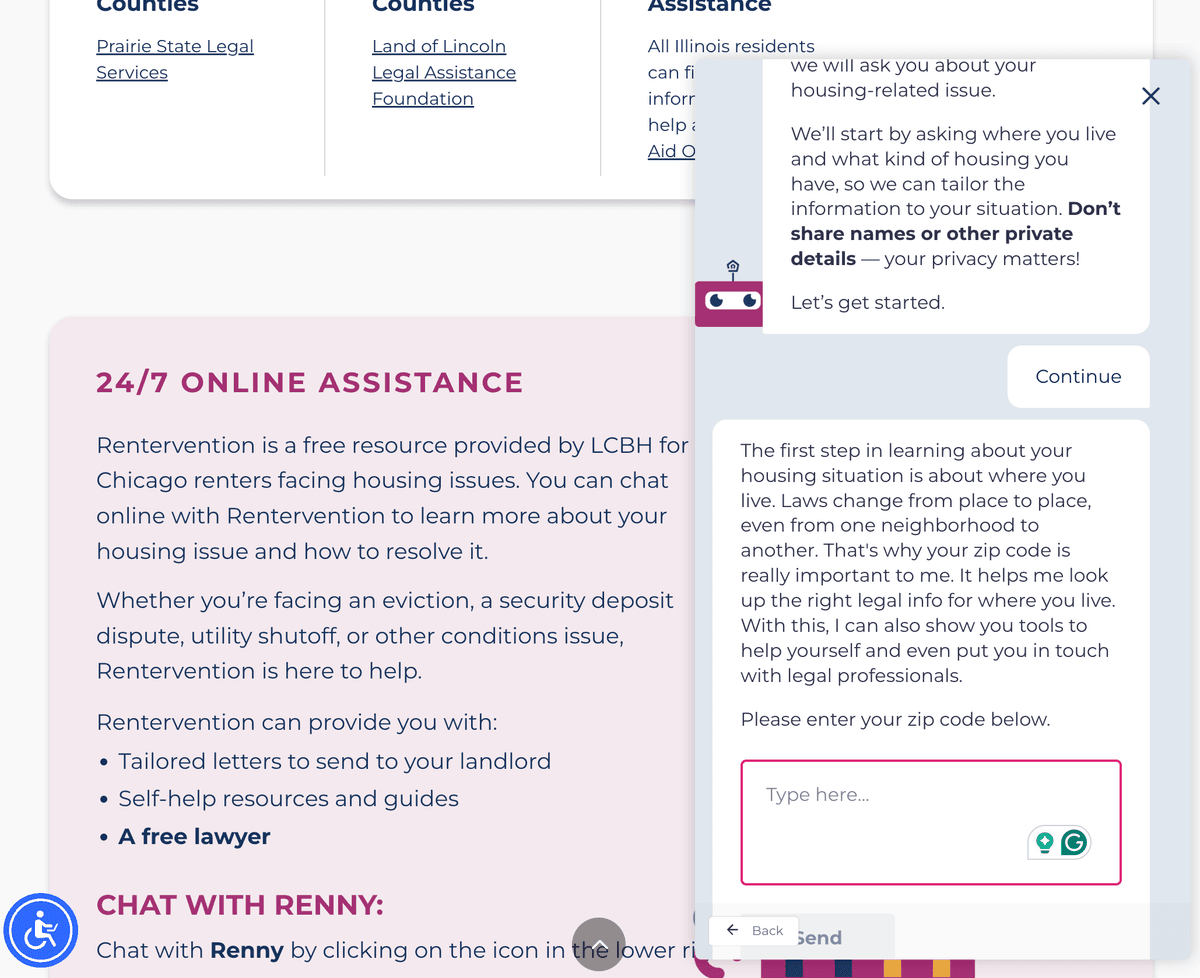

Rentervention

A free, confidential help pathway for Chicago renters that uses an online assistant (“Renny”) to explain rights, draft landlord letters, connect to brief legal advice, and route tenants to appropriate community resources.

Navi community support chatbot

An AI-powered chatbot that helps community members identify legal issues, find appropriate referrals, and access self-help resources in plain language.

Roxanne the Repair Bot

Roxanne is a chatbot that provides NYC tenants with general guidance on how to get housing repairs, based on trusted information from Housing Court Answers.

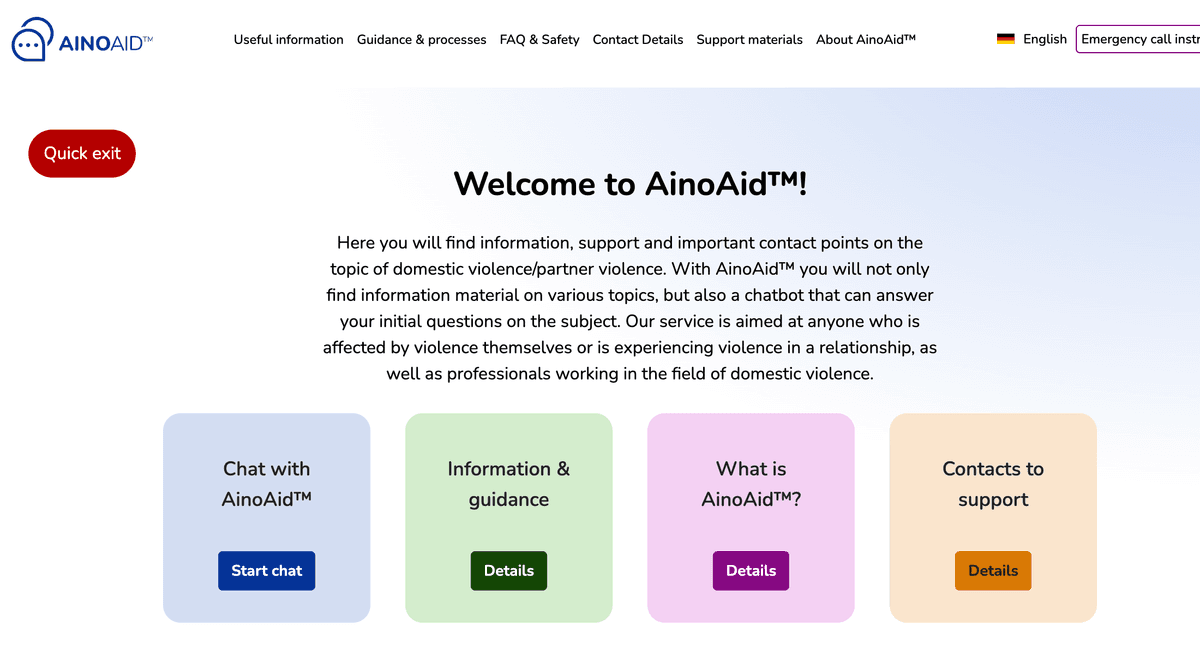

AinoAid

An EU-funded chatbot that offers victims and frontline professionals fast, privacy-preserving guidance on domestic/partner violence, risk awareness, and connections to services—while collecting anonymized signals to improve institutional response.

JES Ask AI

A closed-domain AI assistant that answers family-law questions using only legally reviewed content from FamilyLawInBC.ca, paired with live human help during peak hours.