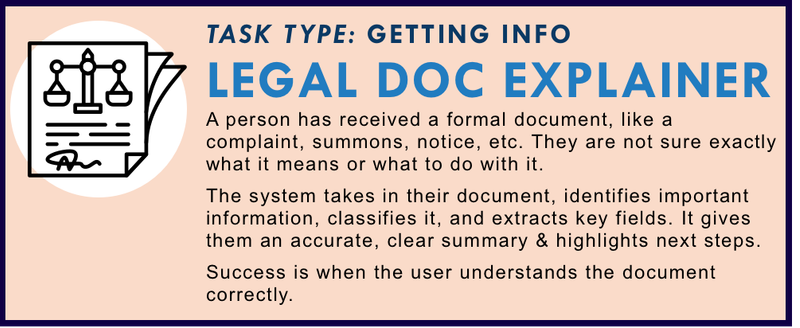

Document Explainer

Help people understand legal documents they’ve received (like notices, summons, and lawsuit documents) by summarizing what the document means and what to do next.

Task Description

When someone receives a legal document—like a court summons, complaint, eviction notice, or government letter—it often sparks confusion, fear, or inaction. These documents are written in formal legal language, filled with deadlines and procedural references, and often offer no guidance on what the recipient should actually do.

This task centers on a system that acts as a legal document explainer and action guide for everyday people. The person uploads or photographs the document. The system scans it, identifies what kind of document it is, extracts key fields (like deadlines, case numbers, court names, or parties), and presents a clear summary: what it is, what it means, and what steps need to be taken next.

Importantly, the system doesn't just translate legal terms—it puts the document in context. It may explain: "This is a court summons. You have been sued by your landlord. You must respond by [date] or you may lose your case." It can flag high-risk issues, link to appropriate help guides or legal aid services, and offer next-step tools (e.g., start a response form).
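
As a rough illustration of the flow described above, the sketch below shows how extracted fields might be turned into a short plain-language summary. It is not an existing implementation: the `ExtractedDocument` record, its field names, and the `render_summary` function are all hypothetical, and the wording of the output would need legal review for each jurisdiction and document type.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ExtractedDocument:
    """Hypothetical record of what the explainer pulled from one document."""
    doc_type: str                          # e.g. "court summons"
    sender: str                            # e.g. "your landlord"
    case_number: Optional[str] = None
    court_name: Optional[str] = None
    response_deadline: Optional[date] = None


def render_summary(doc: ExtractedDocument) -> str:
    """Turn extracted fields into a short plain-language explanation."""
    lines = [f"This is a {doc.doc_type}. It was sent by {doc.sender}."]
    if doc.response_deadline is not None:
        lines.append(
            f"You must respond by {doc.response_deadline:%B %d, %Y} "
            "or you may lose your case."
        )
    else:
        # Assumption: when no deadline was extracted, never guess one.
        lines.append(
            "We could not find a deadline. "
            "Please ask a legal aid office to review this document."
        )
    if doc.case_number:
        lines.append(f"Your case number is {doc.case_number}.")
    return " ".join(lines)


# Made-up sample values, for illustration only.
example = ExtractedDocument(
    doc_type="court summons",
    sender="your landlord",
    case_number="24-CV-0001",
    response_deadline=date(2025, 3, 14),
)
print(render_summary(example))
```

Keeping the extracted fields separate from the rendered text means the same record could feed both the user-facing summary and downstream tools such as intake forms.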

This tool is especially useful for self-represented litigants, people in housing or debt disputes, recipients of benefit denial notices, or anyone navigating legal paperwork without a lawyer. It helps people take timely, informed action rather than missing critical steps.

Success means the person correctly understands what the document is, what it demands or communicates, and what actions they must take—and feels more confident taking those actions.

Goal: Help users and legal aid teams understand the contents, deadlines, and risks in legal documents — and possibly generate or review responses.

Ideally, a tool could:

- Read and explain legal documents in plain language (e.g., eviction notices, court filings, benefit denial letters)

- Extract key fields for downstream use (e.g., in intake forms or triage)

- Review user-created legal filings and flag defects or missing information before submission

There are two major use cases:

- Helping the public understand legal documents they’ve received

- Helping providers check the quality or compliance of outgoing documents

Primary audiences: Self-represented litigants, intake workers, legal aid attorneys, and pro bono volunteers

Quantitative Summary of Stakeholder Feedback

- Average Value Score: 4.26 (High)

- Collaboration Interest: 3.84 (Moderately high)

This task ranked in the top half for both impact and collaboration potential. Stakeholders see clear benefits, but noted that caution is needed around accuracy and jurisdictional differences.

Existing Projects

Respondents noted this space is developing rapidly thanks to general AI tools like GPT-4 and Claude:

- GenAI tools like ChatGPT and Claude already do passable document summaries

- Document analysis tools by Upsolve and internal bots by ILAO and Texas groups

- Legal aid pilots: bots that extract fields from eviction notices and denial letters

- Narrative generators that analyze input forms to create affidavits

- Eviction notice interpreters in some jurisdictions

- Public interest projects on AI-generated plain language explanations for court orders

“I think most off-the-shelf GenAI can scan and summarize filings. What we need is training on specific doc types.”

“We’re piloting AI that flags missing items in housing filings before attorneys submit them.”

“Commercial products exist for contract review — but we need them for housing, benefits, and debt notices.”

Technical Next Steps & Protocols Needed

- Labeled sample documents for eviction, benefits, child custody, etc., annotated with issue, key facts, deadline, and required response

- Field extraction templates

- Legal-specific plain language explanation templates

- UI to allow human review and override

- Integration with court or intake systems (e.g., pre-fill LegalServer)
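
One way to picture the labeled sample documents listed above is as a small record per document, stored one JSON object per line. This is only a sketch: the `LabeledDocument` class, its field names, and the JSON Lines layout are assumptions for illustration, not an agreed protocol.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class LabeledDocument:
    """Hypothetical annotation record for one sample document."""
    doc_id: str
    doc_type: str                         # e.g. "eviction", "benefits", "child custody"
    issue: str
    key_facts: List[str] = field(default_factory=list)
    deadline: Optional[str] = None        # ISO date string, or None if there is none
    required_response: Optional[str] = None


def to_jsonl(records: List[LabeledDocument]) -> str:
    """Serialize records as JSON Lines, one annotated document per line."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in records)


# Made-up sample values, for illustration only.
sample = LabeledDocument(
    doc_id="sample-001",
    doc_type="eviction",
    issue="nonpayment of rent",
    key_facts=["notice dated 2025-01-02"],
    deadline="2025-01-12",
    required_response="file an answer with the court",
)
print(to_jsonl([sample]))
```

A flat, line-oriented format like this is easy for human reviewers to inspect and correct, which fits the human review and override step in the list.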

“Use court records to test identification accuracy — how often does it extract the right case number or response deadline?”

“The tool should explain why something matters, not just extract fields.”

Possible Next Steps

- Start with narrow use cases: eviction notices, SNAP denial letters

- Use AI to assist staff review rather than make final decisions

- Pair with document creation tools: explain → draft → file loop

- Build feedback loops: “Was this helpful?” + legal check

“If AI lives up to its promise, we’ll eventually have a tool that checks a user’s draft like TurboTax checks your tax return.”

Stakeholder Commentary

- Strong support for document interpretation for laypeople—especially in housing, benefits, and family law.

- More skepticism about automated “review and flag” systems for filings—seen as harder to get right and higher risk.

- Context sensitivity is a recurring challenge: different jurisdictions have different doc types and triggers.

“A tool that tells you whether your filing is sufficient would be amazing—but if it’s wrong, it could be devastating.”

“Even judges can’t always agree on what makes a good narrative or what’s missing in a form. This needs human backup.”

- Legal accuracy is risky at scale: Misinterpretation could lead to eviction or missed deadlines

- Some stakeholders are skeptical of fully automated review:

“I do not trust AI to handle this job competently — yet.”

- High variance in document formats: Layouts differ by court, landlord, agency

“This would be hard to do nationally because even local documents vary wildly.”

How to Measure Quality?

📑 Document Type Identification

- Correctly classifies the document (e.g., summons, complaint, notice, denial letter)

- Differentiates between similar forms or multi-page packets

- Recognizes court vs. administrative vs. private notices

🔍 Field Extraction Accuracy

- Accurately extracts key fields: case number, names, court address, deadline, hearing date, reason for notice

- Handles scanned, handwritten, or mixed-format documents

- Outputs structured summary fields for reuse or downstream tools
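
Field extraction accuracy can be scored per field against human-labeled documents. The sketch below is a minimal version under stated assumptions: the field names are hypothetical, a prediction counts as correct only on an exact match after whitespace and case normalization, and real scoring would likely need format-aware comparison for dates and case numbers.

```python
from typing import Dict, List, Optional

Fields = Dict[str, Optional[str]]


def normalize(value: Optional[str]) -> Optional[str]:
    """Collapse whitespace and ignore case so trivial differences do not count as errors."""
    if value is None:
        return None
    return " ".join(value.split()).casefold()


def field_accuracy(predicted: List[Fields], gold: List[Fields]) -> Dict[str, float]:
    """Return, for each gold field name, the share of documents extracted correctly."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must cover the same documents")
    correct: Dict[str, int] = {}
    total: Dict[str, int] = {}
    for pred, truth in zip(predicted, gold):
        for name, expected in truth.items():
            total[name] = total.get(name, 0) + 1
            # A missing predicted field compares as None and is scored as wrong
            # unless the gold label is also None.
            if normalize(pred.get(name)) == normalize(expected):
                correct[name] = correct.get(name, 0) + 1
    return {name: correct.get(name, 0) / total[name] for name in total}


# Made-up sample values, for illustration only.
gold = [
    {"case_number": "24-CV-0001", "deadline": "2025-03-14"},
    {"case_number": "24-CV-0002", "deadline": "2025-04-01"},
]
predicted = [
    {"case_number": "24-cv-0001", "deadline": "2025-03-14"},
    {"case_number": "24-CV-0020", "deadline": "2025-04-01"},
]
print(field_accuracy(predicted, gold))
```

Reporting accuracy per field rather than as one overall number makes it visible when a high-stakes field, such as the response deadline, is extracted less reliably than the others.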

✍️ Clarity of Summary

- Provides a plain-language summary of what the document is and why the person received it

- Breaks down consequences and obligations (e.g., “You must respond in 5 days”)

- Highlights urgent or unusual elements (e.g., demand for money, hearing scheduled)

🧭 Next Step Guidance

- Clearly outlines what the user should do next, by when, and how

- Links to appropriate forms, guides, or help services based on location and issue

- Differentiates optional vs. required next steps

🧠 User Understanding and Trust

- Information is presented in a way that builds user confidence, not fear

- Users report understanding the document better than before using the tool

- Reduces misunderstandings or failure to act due to confusion

🔁 Adaptability and Improvement

- Learns from user feedback or flagging of missed fields or errors

- Supports multiple jurisdictions, document types, and languages

- Offers providers a way to review flagged summaries or provide updates

Stakeholders suggested metrics covering both technical accuracy and user value or understanding:

- Can it extract all critical facts?

- Can users understand the document better afterward?

- Did the tool surface actionable next steps?

- Did a human reviewer confirm accuracy and usefulness?

Respondents highlighted the risks of poor interpretation, and offered ideas for evaluating tool quality:

- Legal accuracy: Are interpretations correct, especially across jurisdictions?

- Readability: Is the explanation actually understandable to a lay user?

- Trust and transparency: Are disclaimers and human review available?

- End-user understanding: Can they take the next step?
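
For the readability check above, one rough automated proxy is the Flesch Reading Ease score, where higher values mean easier text. The sketch below is an assumption about how such a check could be run, not something stakeholders specified. The syllable counter is a crude vowel-group heuristic, so scores are only useful for comparing texts, and they do not replace testing comprehension with real users.

```python
import re


def count_syllables(word: str) -> int:
    """Crude heuristic: count runs of vowels, with a minimum of one per word."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease: 206.835 - 1.015 * (words/sentences) - 84.6 * (syllables/words)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (
        206.835
        - 1.015 * (len(words) / len(sentences))
        - 84.6 * (syllables / len(words))
    )


# Made-up sample sentences, for illustration only.
plain = "You must answer in five days. If you do not, you may lose."
legal = (
    "The defendant is hereby commanded to file a responsive pleading "
    "within the statutorily prescribed period."
)
print(flesch_reading_ease(plain) > flesch_reading_ease(legal))
```

A score like this could flag explanations that drift back toward legal language, while the final judgment stays with human reviewers and user testing.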

“We’d want to test recall and comprehension: can the user explain back what this notice means and what they must do?”

“AI should explain why it thinks a field is important. Not just extract it.”

“A plain-language rewrite should not just simplify, but tell the user what action they need to take.”

Related Projects

Courtroom5 AI-Assisted Legal Documents

A platform that helps self-represented litigants manage civil cases and generate first-pass filings through an AI-guided, rules-aware document workflow, paired with training, community support, and optional limited-scope lawyer review.

LeaseChat

LeaseChat is a free tool that analyzes residential leases, answers questions with references to the document and local law, and drafts landlord communications.