Missouri Tenant Help Intake Screener

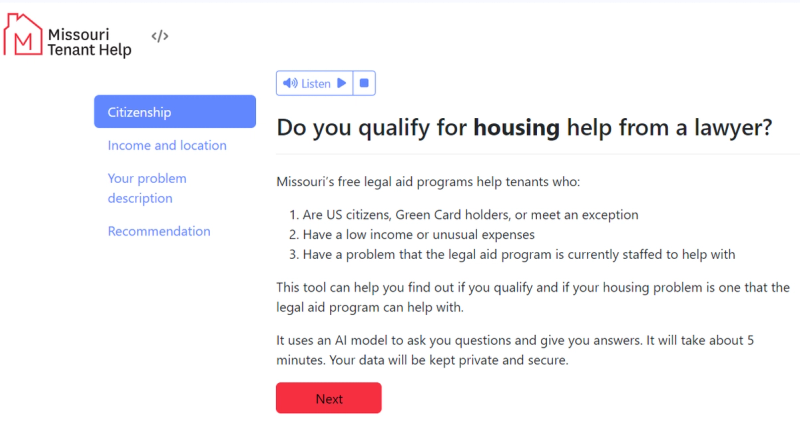

A hybrid rule-based and LLM-powered intake screener developed for Missouri Tenant Help to help determine legal aid eligibility more efficiently and consistently.

Project Description

This project explores whether large language models (LLMs) can support more efficient and accurate legal intake in civil legal services, specifically for housing cases in Missouri. It is a research and development initiative led by Quinten Steenhuis (Lemma Legal) in collaboration with Hannes Westermann (Maastricht Law & Tech Lab), and built in partnership with Legal Services of Eastern Missouri and other Missouri legal aid providers. Funding was provided by the U.S. Department of Housing and Urban Development (HUD).

The project’s focus is on streamlining the initial screening process for legal aid eligibility, particularly for tenants facing housing issues. Legal aid programs often follow complex and evolving intake rules that determine whether a client qualifies for services. These rules are typically not public, vary by jurisdiction, and shift frequently based on funding and staffing constraints. Intake staff must interpret dozens of rules at once, often under time pressure and with limited technological support.

The Missouri Tenant Help system integrates a traditional Docassemble interview with a GPT-4-powered legal issue classifier and intake reasoning module. Fixed criteria (e.g., income, citizenship, location) are screened using rule-based questions. For more open-ended parts, such as interpreting a user’s description of their housing problem, the system uses GPT-4 to analyze the narrative, match it to legal intake rules, and generate follow-up questions if more information is needed.
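The two-stage flow described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the `Applicant` fields, the outcome labels, and the `classify_narrative` callable (a stand-in for the GPT-4 step) are all hypothetical, and only county and income are modeled as fixed criteria.

```python
from dataclasses import dataclass
from typing import Callable, Set


@dataclass
class Applicant:
    county: str
    annual_income: float
    narrative: str  # free-text description of the housing problem


def passes_fixed_criteria(applicant: Applicant, served_counties: Set[str],
                          income_limit: float) -> bool:
    """Rule-based check on fixed criteria (hypothetical: county and income only)."""
    return (applicant.county in served_counties
            and applicant.annual_income <= income_limit)


def screen(applicant: Applicant, served_counties: Set[str], income_limit: float,
           classify_narrative: Callable[[str], str]) -> str:
    """Run the fixed-criteria check first, then hand the narrative to the LLM step."""
    if not passes_fixed_criteria(applicant, served_counties, income_limit):
        # The tool does not issue rejections, so the user is pointed to the program.
        return "contact_program"
    # classify_narrative is a hypothetical stand-in for the GPT-4 classifier.
    return classify_narrative(applicant.narrative)
```

Passing the LLM step in as a callable keeps the rule-based part testable on its own, for example with a stub that always returns `"needs_follow_up"`.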

The user-facing intake process is optional and designed to complement, not replace, existing phone-based intake. It provides applicants with a preliminary screening result, indicating whether their issue is likely to be accepted by a local legal aid group. If they meet the current intake criteria, they are directed to the relevant program and its contact information. Importantly, the tool does not issue rejections: users who are uncertain are still encouraged to contact the program directly.

The system was tested using 16 hypothetical scenarios, the intake rules of 3 different legal aid programs, and 8 LLMs. GPT-4 performed the most consistently, achieving 84% precision in matching legal expert determinations. The LLM showed caution in borderline cases, often generating clarifying questions rather than prematurely rejecting potential clients. Accuracy was especially important for minimizing incorrect denials, and in some instances the model outperformed human labelers in applying intake criteria.
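For readers unfamiliar with the metric, the sketch below shows one common way to compute precision against expert labels. The source does not state exactly how the 84% figure was calculated, so this assumes the standard definition (true positives divided by all predicted positives), with "accept" treated as the positive class. The label strings are hypothetical.

```python
from typing import List, Optional


def precision(predicted: List[str], expert: List[str],
              positive: str = "accept") -> Optional[float]:
    """Share of the model's positive predictions that the expert also marked positive."""
    pairs = list(zip(predicted, expert))
    true_pos = sum(1 for p, e in pairs if p == positive and e == positive)
    false_pos = sum(1 for p, e in pairs if p == positive and e != positive)
    if true_pos + false_pos == 0:
        return None  # the model made no positive predictions
    return true_pos / (true_pos + false_pos)
```

Under this definition, a model that answers with a clarifying question instead of "accept" in a borderline case does not lower precision, which fits the cautious behavior described above.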

Current limitations include overly cautious questioning when sufficient data is already available, and content moderation constraints in some LLMs (e.g., Gemini) that blocked responses on topics like domestic violence. The research team is exploring adaptive model switching, improved prompt design, and a better balance between follow-up questions and user burden.

System Features

- Hybrid architecture: Combines Docassemble rule-based questions with GPT-4 follow-up logic

- Text-based intake rules: Legal aid programs can update intake policies by uploading plain text documents, with no programming required

- Real-time triage logic: The LLM classifies the legal problem, matches it against the intake rules, and triggers clarifying questions as needed

- Data protection: Users are warned not to enter sensitive data; under OpenAI's usage policy, submitted data is not used for training
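As a rough illustration of how plain-text intake rules and triage could fit together, the sketch below builds a prompt from a rules document and parses a structured reply. The prompt wording, the JSON reply shape, and the decision labels are assumptions made for this example and are not taken from the project. No model is called here; sending the prompt to an LLM is left out.

```python
import json
from typing import Any, Dict

# Hypothetical decision labels; note there is deliberately no "rejected" value.
ALLOWED_DECISIONS = {"likely_accepted", "needs_follow_up", "unclear"}


def build_prompt(rules_text: str, narrative: str) -> str:
    """Combine a program's plain-text intake rules with the applicant's narrative."""
    return (
        "You are screening a housing intake request.\n"
        "Intake rules:\n" + rules_text.strip() + "\n\n"
        "Applicant's description:\n" + narrative.strip() + "\n\n"
        'Reply with JSON: {"decision": "...", "follow_up_questions": ["..."]}'
    )


def parse_reply(reply: str) -> Dict[str, Any]:
    """Parse the model's reply, falling back to 'unclear' on anything unexpected."""
    fallback = {"decision": "unclear", "follow_up_questions": []}
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return fallback
    if not isinstance(data, dict):
        return fallback
    decision = data.get("decision")
    if decision not in ALLOWED_DECISIONS:
        decision = "unclear"
    questions = data.get("follow_up_questions")
    if not isinstance(questions, list):
        questions = []
    return {"decision": decision,
            "follow_up_questions": [str(q) for q in questions]}
```

Because the rules are passed in as text, updating a program's policy in this sketch means swapping the `rules_text` string rather than changing code. Treating malformed replies as "unclear" is one way to avoid turning a parsing failure into an implicit rejection.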

Deployment and Scope

The tool is currently live as a self-screening feature on the Missouri Tenant Help website and serves tenant applicants across Missouri’s four major legal aid programs:

- Legal Services of Eastern Missouri

- Legal Aid of Western Missouri

- Mid-Missouri Legal Services

- Legal Services of Southern Missouri

The screening tool supports housing-related issues only at this time. Future expansion may include other civil legal issue areas and integration with case management systems like LegalServer.

Current Status and Next Steps

- The system is live and undergoing real-world testing

- User and provider feedback is being used to refine LLM prompts and follow-up logic

- Ongoing analysis of cost-efficiency (~$0.05 per use) and error handling

- Planning for broader topic coverage, improved fact-gathering logic, and more customizable local deployments