Intake Interviewing

Help providers understand who they’re helping and what path to take by gathering and organizing a complete, accurate picture of the person and their situation.

Task Description

The goal of this task is to streamline intake by asking users structured questions, pre-filling forms, enriching user data (e.g. with court records or public benefits info), and generating summaries or eligibility flags.

When someone reaches out to a legal help provider or justice system service, the organization must quickly determine if they can assist, what kind of help is appropriate, and how urgent the situation is. This requires gathering details about the person’s legal problem, goals, background, preferences, and any eligibility factors or risk indicators. Doing this well takes time, staff skill, and careful follow-up.

This task centers on a system that serves as an intake and profiling assistant. It collects structured and unstructured data through forms, guided interviews, or document uploads. It asks clarifying follow-up questions based on the person’s responses, and it classifies the type of issue(s) presented—such as eviction, custody, wage theft, or benefits denial. It also flags priority indicators (e.g., deadlines, safety risks, housing status) and notes key demographic or accessibility needs.
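
The follow-up and flagging behavior described above can be sketched as a small rule table. This is a minimal illustration under stated assumptions, not the design of any existing tool: the issue categories come from the text, while the field names (`issue`, `hearing_date`, `feels_unsafe`), the question wording, and the flag names are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical follow-up rules: each issue type maps to (field, question)
# pairs. A question is asked only while its field is still unanswered.
FOLLOW_UPS = {
    "eviction": [
        ("hearing_date", "Do you have a court hearing date? If so, when?"),
        ("notice_received", "Have you received a written notice from your landlord?"),
    ],
    "custody": [
        ("existing_order", "Is there an existing custody order?"),
    ],
}


@dataclass
class Intake:
    answers: dict = field(default_factory=dict)


def next_questions(intake: Intake) -> list[str]:
    """Return follow-ups relevant to the stated issue and not yet answered."""
    issue = intake.answers.get("issue")
    return [
        question
        for key, question in FOLLOW_UPS.get(issue, [])
        if key not in intake.answers
    ]


def priority_flags(intake: Intake) -> list[str]:
    """Surface indicators for human review; nothing here screens anyone out."""
    flags = []
    if intake.answers.get("hearing_date"):
        flags.append("deadline")
    if intake.answers.get("feels_unsafe") is True:
        flags.append("safety_risk")
    return flags
```

Keeping the rules in plain data rather than in model output is one way to let intake staff read and edit exactly which follow-ups are asked.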

The system then produces a structured, comprehensive intake summary that includes: legal issue type(s), urgency, eligibility considerations, user goals and preferences, service history (if any), and proposed next steps. It’s designed to equip the provider team with everything they need to decide what track the person should go on and how to respond effectively.
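
As a rough sketch, the summary elements listed above could be held in a single structured record. The class name, field names, and the three-level urgency scale are assumptions for illustration; a real deployment would follow the provider's own case management schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class IntakeSummary:
    # Field names are illustrative; they mirror the elements named in the text.
    issue_types: list[str]
    urgency: str  # assumed scale: "high", "medium", or "low"
    eligibility_notes: list[str] = field(default_factory=list)
    goals: list[str] = field(default_factory=list)
    preferences: dict[str, str] = field(default_factory=dict)
    service_history: list[str] = field(default_factory=list)
    proposed_next_steps: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the summary for hand-off to another system."""
        return json.dumps(asdict(self), indent=2)


# Example usage with made-up values
summary = IntakeSummary(
    issue_types=["eviction"],
    urgency="high",
    goals=["stay in current home"],
    proposed_next_steps=["staff review of hearing date"],
)
print(summary.to_json())
```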

This task is especially useful in high-volume or low-capacity environments, where expert-level triage isn’t always possible for every new intake. It helps the team work faster and more accurately, and ensures the person’s story and needs are documented in a way that supports strong service decisions.

Success means the provider receives a complete, well-organized profile of the person and their situation—captured efficiently and accurately enough to begin meaningful service or triage.

Primary audiences: Legal aid applicants, intake teams, navigators, and attorneys preparing for first client contact.

Quantitative Summary of Stakeholder Feedback

- Average Value Score: 3.37 (Lowest among clusters)

- Collaboration Interest: 3.11 (Low-moderate)

This cluster scored lowest in perceived value, though respondents did not dismiss it entirely. The hesitation seems to stem from sensitivity around user data, privacy, and the complexity of qualifying someone for legal aid.

Existing Projects

Some groups have been experimenting with parts of this idea:

- LegalServer’s intake form integrations

- Chatbot pilots that ask structured intake questions

- A few references to tools that automatically pull court records in housing or debt cases

- North Carolina’s triage and intake tools serve as partial foundations

- Some groups are exploring auto-generated user summaries or client flags

“We’re starting to explore pulling in court case metadata automatically, but we’re not doing full profiling.”

“Some orgs have built tools to pre-fill intake from user behavior, but we haven’t adopted them yet.”

Technical Protocols & Next Steps

- Standard intake question sets (demographics, legal needs, barriers)

- External data integration:

  - Public court records

  - Benefits eligibility APIs

  - Past applications or service history

- Consent workflows: Users must approve data gathering

- Profiling logic:

  - Risk flags, urgency indicators, potential service matches
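
The consent requirement in the list above can be sketched as a gate in front of every external lookup. The two fetch functions are stand-ins that return empty placeholder data; no real court or benefits API is called, and the source names and return shape are assumptions.

```python
from typing import Callable


# Stand-ins for real integrations (hypothetical; they return placeholders).
def fetch_court_records(person_id: str) -> dict:
    return {"source": "court_records", "cases": []}


def fetch_benefits_info(person_id: str) -> dict:
    return {"source": "benefits", "programs": []}


SOURCES: dict[str, Callable[[str], dict]] = {
    "court_records": fetch_court_records,
    "benefits": fetch_benefits_info,
}


def enrich(person_id: str, consents: dict[str, bool]) -> dict:
    """Query only the sources the user has explicitly approved."""
    results, skipped = {}, []
    for name, fetch in SOURCES.items():
        if consents.get(name) is True:
            results[name] = fetch(person_id)
        else:
            skipped.append(name)  # missing consent is treated as "no"
    return {"data": results, "skipped": skipped}
```

Returning the skipped sources alongside the data lets staff see what was not checked, rather than mistaking an absent lookup for an empty record.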

“The data it gathers must be reviewable and editable — this isn’t a black box.”

“We ask trauma-informed, complex questions. I don’t trust AI to read the room or catch what’s not being said.”

- Use AI to support human intake, not replace it

- Focus on summarizing, organizing, and flagging, not filtering people out

- Ensure all data is auditable and overrideable

- Pilot with internal navigators before exposing to public users
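
One way to make captured data auditable and overrideable, sketched here under assumptions, is to store each value with its source and keep a history whenever staff change it. The class and field names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditedField:
    name: str
    value: object
    source: str  # assumed labels: "ai", "user", or "staff"
    history: list = field(default_factory=list)

    def override(self, new_value: object, staff_id: str, reason: str) -> None:
        """Replace the value while recording what it was and who changed it."""
        self.history.append({
            "previous_value": self.value,
            "previous_source": self.source,
            "changed_by": staff_id,
            "reason": reason,
            "changed_at": datetime.now(timezone.utc).isoformat(),
        })
        self.value = new_value
        self.source = "staff"


# Example: a navigator corrects an AI-assigned issue type
issue = AuditedField("issue_type", "debt", "ai")
issue.override("eviction", "navigator-7", "Client described a notice to vacate")
```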

“If it makes a screener’s job easier and doesn’t replace their judgment, it’s worth exploring.”

“I want a summary, not a sentence. The AI can prep, but humans should still steer.”

Stakeholder Commentary

There was a mix of interest and deep concern:

- Some see this as a “holy grail” to improve workflows and save attorney time.

- Some question whether external data is reliable, since it may be outdated, incomplete, or misapplied.

- Others worry about bias, privacy, and misclassification, especially in urgent or high-stakes scenarios.

- Several raised concerns about automating the human warmth and judgment that intake requires.

“There is potential here—if it's designed with humans in the loop and makes their job easier, not harder.”

“Profiling users can be dangerous — we don’t want AI deciding who’s deserving.”

“This feels like the holy grail — and that makes me nervous.”

How to Measure Quality?

🧾 Completeness of Key Fields

- Captures all required intake fields, including legal issue, dates, eligibility factors, and demographic information

- Prompts user to clarify missing or ambiguous responses

- Supports branching logic to ask relevant follow-ups based on earlier answers
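
A completeness check along these lines could be as simple as the sketch below. The list of required fields is an assumption; each program would define its own.

```python
# Hypothetical required fields; substitute the program's own list.
REQUIRED_FIELDS = [
    "legal_issue",
    "key_dates",
    "household_size",
    "income",
    "preferred_language",
]


def missing_fields(record: dict) -> list[str]:
    """Fields that are absent or blank, so the interview can prompt for them."""
    missing = []
    for name in REQUIRED_FIELDS:
        value = record.get(name)
        # A numeric 0 (e.g. zero income) counts as answered; only None
        # and empty or whitespace-only strings count as missing.
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(name)
    return missing


def completeness(record: dict) -> float:
    """Share of required fields that have a usable answer, from 0.0 to 1.0."""
    return 1 - len(missing_fields(record)) / len(REQUIRED_FIELDS)
```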

🧠 Issue Spotting and Classification Accuracy

- Correctly identifies primary and secondary legal issues

- Flags related legal topics or systemic problems (e.g., debt tied to housing loss)

- Assigns categories that match internal program definitions or CMS tags

⏱️ Efficiency and Workflow Fit

- Reduces intake time for staff while improving quality of responses

- Allows intake summaries to feed directly into case management, routing, or referral systems

- Avoids overloading user with unnecessary questions

👤 User Context and Preferences

- Captures user goals, service preferences, language access needs, and communication barriers

- Includes relevant context such as disability, immigration status, or family care responsibilities

- Preserves user voice where appropriate while summarizing for the team

🔎 Readiness for Team Decision-Making

- Produces a clear, readable intake profile or dashboard view

- Highlights urgency, risks, or special conditions requiring priority attention

- Includes enough information for triage, eligibility review, or direct assignment

🔐 Confidentiality and Respectful Framing

- Respects user privacy while gathering sensitive data

- Frames questions in trauma-informed, culturally competent ways

- Avoids assumptions and flags areas requiring further human review

Respondents said evaluation should cover both data quality and user experience:

- Completeness and accuracy of intake information

- Efficiency: How much time is saved for staff?

- User comfort: Are users willing to share more with AI?

- Bias and fairness audits: Is data gathered or interpreted in a discriminatory way?

“An AI is doing a good job if we end up with less back-and-forth, and better-informed first calls.”

“Measure how often staff have to redo or clarify what the bot captured.”

A possible rubric might involve:

- Completeness of intake: Are all needed fields collected correctly?

- Staff burden reduction: How much time does this save for humans?

- Accuracy of classification: Does it place users in the right workflow?

- Client experience: Do users feel respected, seen, and supported?
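
Three of the measures above (completeness, classification accuracy, and how often staff must redo or clarify what was captured) can be computed from reviewed intakes. The sketch below assumes a hypothetical record format and that every intake has at least one required field. Time saved and client experience are left out because they need timing data and user surveys.

```python
from dataclasses import dataclass


@dataclass
class ReviewedIntake:
    predicted_workflow: str
    staff_workflow: str      # the workflow staff ultimately chose
    fields_required: int     # assumed to be greater than zero
    fields_captured: int
    staff_corrections: int   # fields staff had to redo or clarify


def score(batch: list[ReviewedIntake]) -> dict[str, float]:
    """Average the rubric measures over a batch of staff-reviewed intakes."""
    if not batch:
        raise ValueError("need at least one reviewed intake")
    n = len(batch)
    return {
        "completeness": sum(r.fields_captured / r.fields_required for r in batch) / n,
        "classification_accuracy": sum(
            r.predicted_workflow == r.staff_workflow for r in batch
        ) / n,
        "redo_rate": sum(r.staff_corrections > 0 for r in batch) / n,
    }
```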

Related Projects

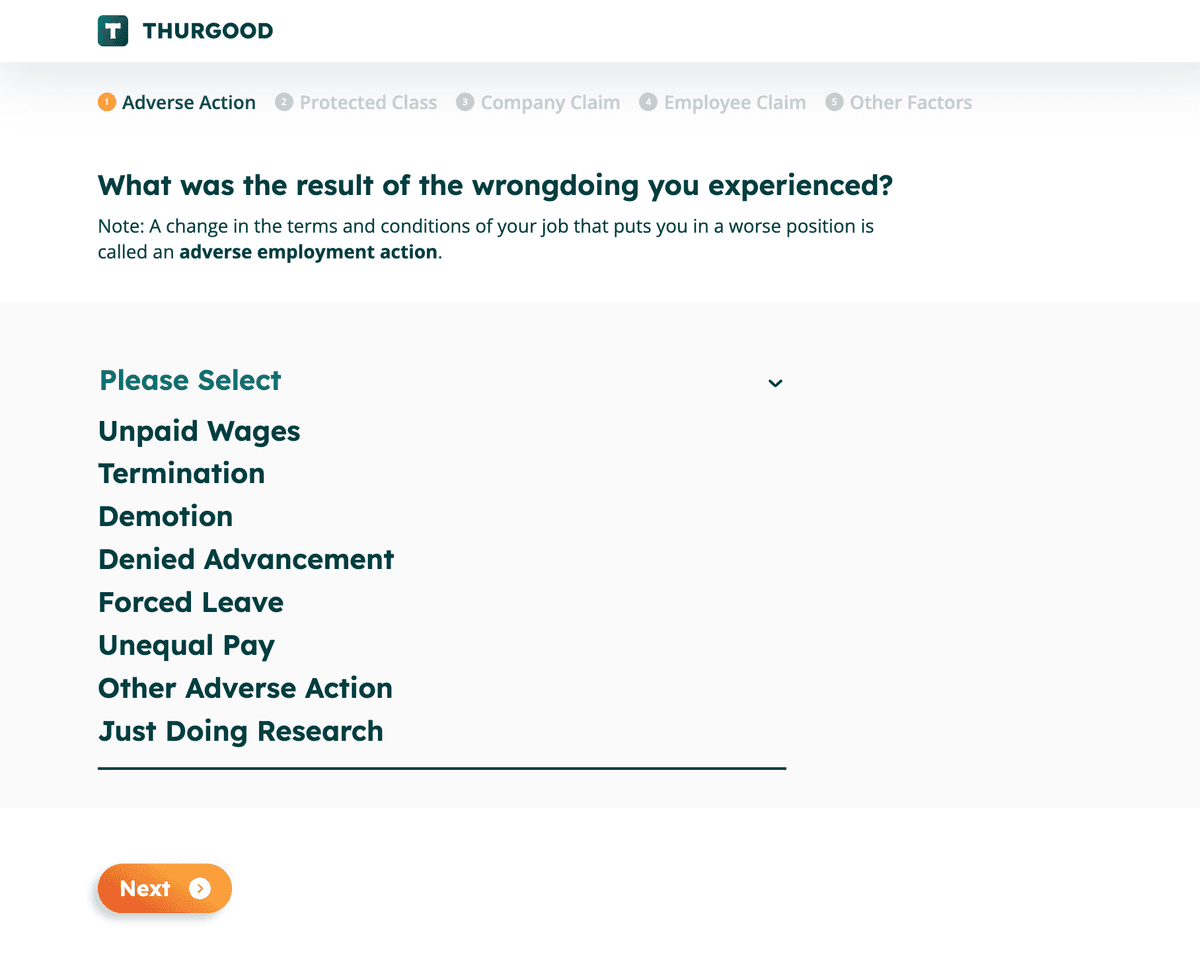

Ask Thurgood

An AI-driven intake and screening system for employment-law practices that automates most first-contact triage, produces structured applicant casefiles, and supports firms with human-reviewed quality control and automated client communications.

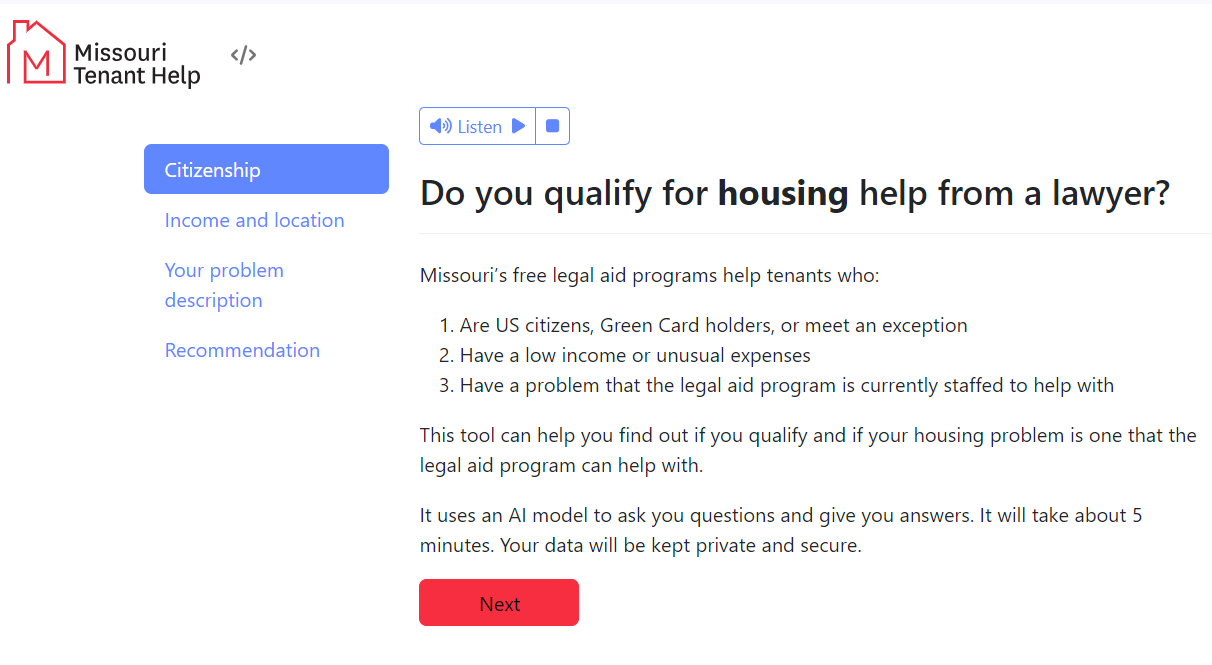

Missouri Tenant Help Intake Screener

A hybrid rule-based and LLM-powered intake screener developed for Missouri Tenant Help to help determine legal aid eligibility more efficiently and consistently.