Lease Data Extractor with AI

A research prototype to extract structured data from images of Tenancy Agreements (typed, neat, and sloppy) using AI

Project Description

Multi-Modal LLMs for Justice: Extracting Legal Data from Paper Forms

This project, led by Hannes Westermann and Jaromir Savelka, investigates how multi-modal large language models (LLMs) can help laypeople and self-represented litigants overcome the challenges of dealing with legal documents—especially when relevant information exists only in paper form. The study explores whether state-of-the-art models like GPT-4o can accurately extract structured data from images of handwritten or printed forms, even when the data is messy, incomplete, or captured in low-light conditions.

Problem Addressed

People navigating the legal system must often interpret and extract information from official documents like leases, benefit forms, and letters—many of which exist only in paper format. This is a major barrier to accessing justice. Tools like LLMs can help answer questions or fill out forms, but they usually rely on the user to type in the necessary information correctly. This creates friction and limits usability.

Approach

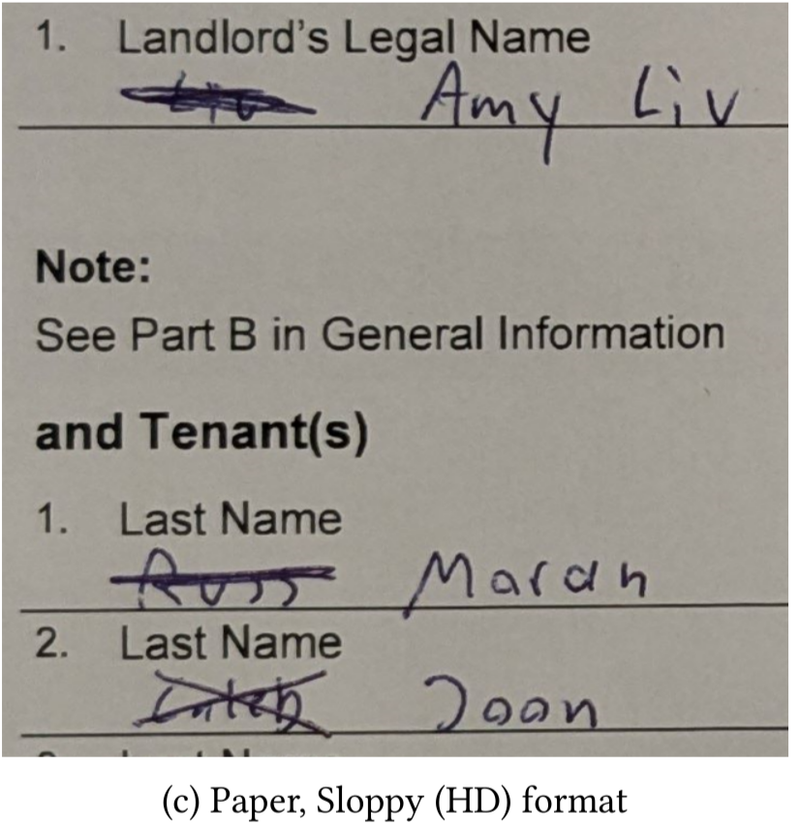

To test the potential of LLMs to solve this challenge, the team created a benchmark dataset consisting of filled-out versions of Ontario's Residential Tenancy Agreement (Standard Form of Lease). The forms were completed in three different information scenarios, each with varying levels of complexity and missing data. For each scenario, the form was rendered in five formats:

- Typed PDF (screenshot)

- Neatly handwritten, high-quality image

- Sloppily handwritten, high-quality image

- Neatly handwritten, low-quality image

- Sloppily handwritten, low-quality image

The GPT-4o model was given instructions and base64-encoded images to extract 14 key data fields, including landlord and tenant names, addresses, and unit details.

Key Findings

- Overall Accuracy: The model correctly extracted 73% of all fields across scenarios.

- Typed forms performed best: With nearly 98% accuracy, digital forms yielded near-perfect extraction.

- Messy handwriting and poor image quality reduced performance, but the model could still locate fields and partial content in most cases.

- Certain fields like city, province, and street name were more robust, while street number and uncommon names posed greater difficulty.

- Models favored common names (e.g., Jane over Jame), revealing potential bias embedded in token distributions.

Implications

This study is a promising step toward developing user-facing AI systems that can simply ask someone to “take a picture” of a lease or document, then help extract and reuse key information to:

- Populate forms

- Explain rights

- Generate legal drafts

It highlights multi-modal LLMs as a tool for reducing cognitive and technical barriers in justice processes, particularly for those without legal training or strong digital literacy.

Future Work

- Larger-scale evaluation with a broader range of forms

- Refining prompts and model selection to boost accuracy

- Integrating this capability into complete legal help systems

This work adds to a growing body of research demonstrating how AI and LLMs can increase access to justice—not just by interpreting law, but by helping people handle the messy paperwork required to use it.

Read more at the paper from Hannes and Jaromir: https://arxiv.org/html/2412.15260v1