A2J ImageGen: Visualizing Legal Documents with AI

An AI prototype to help transform complex court forms, fliers, and training materials from text into visual-first, well-designed materials for litigants

Project Description

A2J ImageGen is a prototype developed by Brian Rhindress at Georgetown Law to explore whether multimodal large language models (MLLMs), such as Gemini or ChatGPT, can be used to automatically reformat dense legal documents into visual, user-friendly formats. These documents include court forms, legal outreach fliers, and volunteer manuals. The overarching goal is to make legal information more understandable and accessible to self-represented litigants (SRLs) and the community members who help them.

Why it's important

Legal documents are often written in complex, inaccessible formats that deter the very people they are intended to help. Prior research in legal design has shown that visuals (such as annotated forms, diagrams, and comics) can greatly improve the usability of legal materials. However, designing these visuals has historically required time-consuming and costly manual work. A2J ImageGen investigates whether AI can speed up this process and produce rough visual drafts to help legal organizations develop more effective public-facing materials.

Details and specifics

The system uses prompt-based generation to convert legal content into visuals. It takes input documents such as California eviction court forms, public legal education fliers, and volunteer orientation manuals, and transforms them into graphics through structured prompting.

The specific visualizations were:

- A comic book for training self-help center volunteers

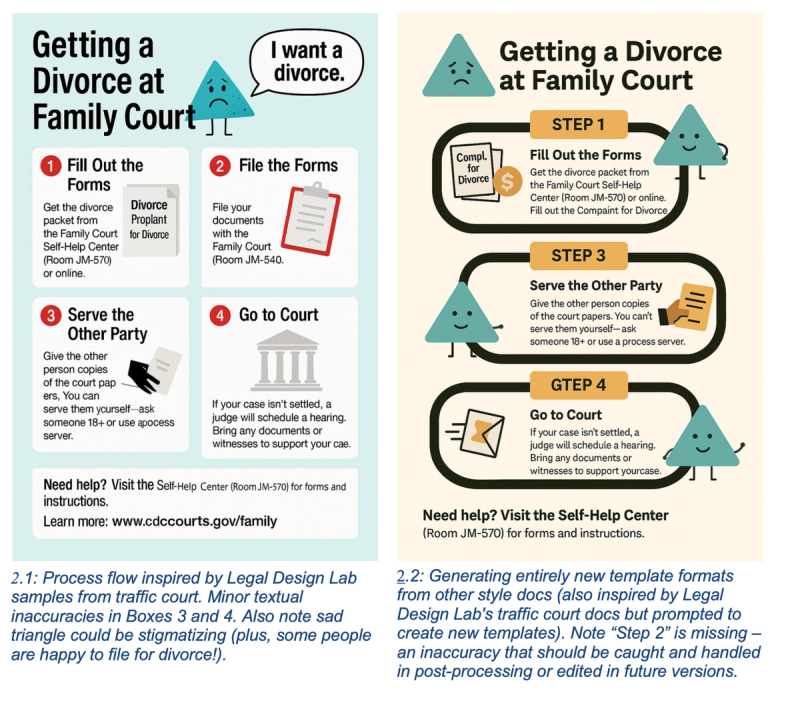

- A process flier outlining the steps of a custody case in Family Court

- A graphic instruction page as front matter for a court form

- A redesigned court form with in-line graphics

- A graphic summons/court reminder

- A graphic FAQ page for self-represented litigants

The prompts are crafted using best practices from the Stanford Legal Design Lab, the U.S. Digital Service’s content design standards, and examples from the Graphic Advocacy Project. The generated outputs include visual callouts, comics, annotated forms, process flowcharts, and timelines.

- The model used is OpenAI GPT-4o.

- The configuration followed a sequence of prompts.

- First, a prompt asks the agent to extract the key lessons from the source document as text. This step is about excerpting, synthesizing, and organizing content well.

- Next, a prompt directs the agent to lay the content out in a particular visual template, such as the panels of a comic with characters or a flier that follows an attached example template. Alternatively, the prompt can specify a few well-defined visual principles covering layout, font size, colors, characters, alignment, and more. It should also ask the agent to insert the content extracted in the earlier step.

- Once the agent has generated images, follow-up prompts can be used to refine, correct, and simplify them, and to address other problems that arise. A follow-up prompt can also serve as self-critique: the agent holds its previous designs up to visual and language design standards and gives feedback based on those design heuristics.
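The prompt sequence described above can be sketched in code. This is a minimal illustration under stated assumptions, not the prototype's actual implementation: the prompt wording, the function name `run_prompt_chain`, and the `generate` callable (a stand-in for whatever call sends a prompt to an image-capable model and returns its output) are all hypothetical.

```python
from typing import Callable, List, Tuple

# Hypothetical prompt templates. The wording is illustrative only and is
# not taken from the A2J ImageGen prototype.
EXTRACT_PROMPT = (
    "Extract the key lessons from the document below. "
    "Excerpt, synthesize, and organize them in plain language.\n\n"
    "DOCUMENT:\n{document}"
)
LAYOUT_PROMPT = (
    "Lay out the content below as a {template}. "
    "Follow these visual principles: {principles}.\n\n"
    "CONTENT:\n{content}"
)
CRITIQUE_PROMPT = (
    "Review the previous design against these heuristics: {heuristics}. "
    "List the problems, then produce a corrected, simplified version.\n\n"
    "PREVIOUS DESIGN:\n{design}"
)


def run_prompt_chain(
    document: str,
    template: str,
    principles: List[str],
    heuristics: List[str],
    generate: Callable[[str], str],  # assumed wrapper around a model call
    refine_rounds: int = 1,
) -> List[Tuple[str, str]]:
    """Run extract -> layout -> refine and return (step name, output) pairs."""
    steps: List[Tuple[str, str]] = []

    # Step 1: pull the key lessons out of the source text.
    extracted = generate(EXTRACT_PROMPT.format(document=document))
    steps.append(("extract", extracted))

    # Step 2: place the extracted content into the chosen visual template.
    design = generate(
        LAYOUT_PROMPT.format(
            template=template,
            principles=", ".join(principles),
            content=extracted,
        )
    )
    steps.append(("layout", design))

    # Step 3: self-critique and refinement, repeated as many times as requested.
    for _ in range(refine_rounds):
        design = generate(
            CRITIQUE_PROMPT.format(
                heuristics=", ".join(heuristics),
                design=design,
            )
        )
        steps.append(("refine", design))

    return steps
```

Keeping `generate` as a parameter means the same chain can be tried against different models, or against a stub during testing. Every intermediate output is returned so that a human reviewer can inspect each stage before anything reaches a client or a court.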

What can be used now

The current version of the tool is a working demo available at a2j_imagegen. Legal aid and access to justice teams can use it as a rapid ideation and prototyping tool. By pasting their legal content into structured prompts, they can produce a wide range of visual formats that help spark ideas for how to redesign information for the public.

Although it is not yet integrated into a full product with an API, the prototype shows how targeted prompting strategies can yield surprisingly rich first-draft visual content. Teams can use it to generate first drafts that show how their text-based trainings, forms, and fliers could become more visual and user-friendly resources.

What testing has revealed

Early experiments with the tool have shown that AI-generated visuals can provide inspiration and speed up the design process. However, the outputs are not always consistent in layout logic. Common issues include misaligned checkboxes, hallucinated text, and awkward use of graphic elements. These limitations mean that while the tool is valuable for brainstorming and prototyping, human oversight is essential before any content can be used directly with clients or submitted to a court. Overall, A2J ImageGen demonstrates the promise of AI-assisted visual design but also underscores the need for robust standards, templates, and review protocols in this emerging space.