Fines & Fees Data Researcher

An AI-powered agent that helps legal aid teams retrieve and organize a person's fines and fee data from across Oklahoma court dockets, to produce reports for the teams as they work on getting criminal justice debt waived.

Project Description

The “Fines & Fees Data Researcher” is an AI co-pilot developed by the Stanford Legal Design Lab with Legal Aid Services of Oklahoma (LASO) to help a reentry legal team quickly assemble a comprehensive report of a client’s criminal-court financial obligations (fines, fees, and payment history) across Oklahoma counties.

The tool’s purpose is to reduce the manual effort of searching multiple public systems and to return a structured report that legal teams can use in cost-reduction or waiver work. At a high level, the tool queries court data sources for a specific person's records, organizes what it finds by county and case, and outputs a verification-friendly summary that flags uncertain matches and potential issues for human review.

The problem to be solved

The problem it addresses is straightforward but time-consuming in practice: for a single client, staff must determine where charges exist statewide, what amounts remain, which non-court obligations may apply (e.g., DA supervision fees or restitution), whether there are active warrants, and what payments (if any) have been made.

Today, this requires multiple searches in two public court systems—OSCN (covering all counties, including imports) and ODCR (native interface for KellPro counties)—plus inspection of e-payments views and docket PDFs to reconstruct the total picture. The tool aims to centralize that legwork into a repeatable workflow.

The value of the tool

This tool automates data collection from key state and county databases, identifies outstanding fines, categorizes fee types, tracks payment history, and presents the findings in a structured format. The key user of the tool's output is the legal team member (often the lead lawyer) who will review the report to decide in which counties to take action, what to request, and how to strategically proceed.

It is designed to reduce the burden on legal teams, enhance accuracy, and improve case strategy by surfacing all relevant financial obligations across jurisdictions. The agent also flags uncertainties, such as data gaps or common name conflicts, and provides transparent, verifiable output for legal review.

Development of the tool

The project is a collaboration between LASO’s reentry attorneys and paralegals and the Stanford Legal Design Lab, with engagement from external data partners who have long worked on Oklahoma court data. The core LASO group includes attorneys and staff who focus on women’s reentry, county cost-docket work, and diversion-hub representation; the initiative also coordinates with the Oklahoma Policy Institute data team and public defenders who confront similar data-gathering challenges.

Following initial design research with LASO legal staff, the project team identified core technical requirements and mapped the existing manual workflow used to gather fine and fee data. These findings informed a phased development plan focused on replicating and enhancing key steps through automation while embedding human review checkpoints throughout the workflow.

Once the technical setup was in place, the next phase of development involved building data connectors to the relevant databases and working with the organizations that run them. Ensuring responsible and secure access to sensitive information is a top priority. After the app and data connections were established, the tool went into sandbox testing.

Technical details & workflow

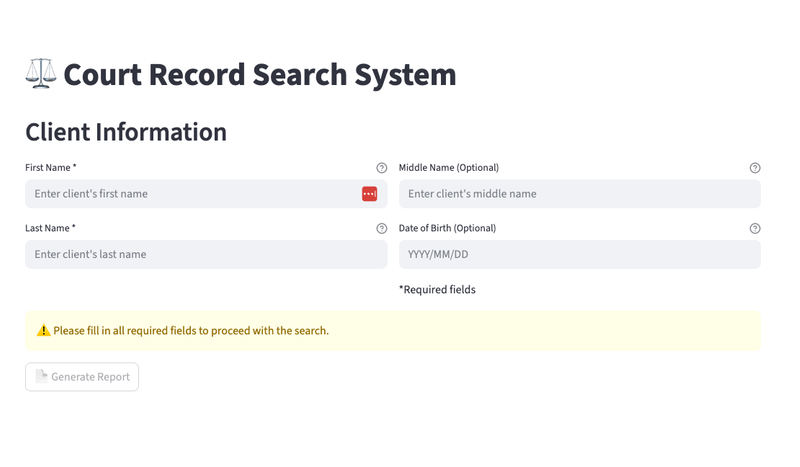

The tool is a web application that accepts a small set of identifiers (e.g., name, DOB) and then programmatically queries approved public sources (OSCN, ODCR, and related datasets).

Early iterations focused on reliability, standardized outputs, and deployability: the team moved the agent to a Pydantic-AI framework to guarantee schema-consistent results, introduced a static IP to enable source whitelisting, and added authentication and session persistence so that results can be saved and revisited.
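To illustrate what schema-consistent results can mean in practice, here is a minimal sketch of a typed case record. The project uses Pydantic-AI; this sketch substitutes standard-library dataclasses as a dependency-free stand-in. Every name in it (`CaseSummary`, `parse_case`, `amount_owed`, and the other fields) is a hypothetical illustration, not the project's actual schema.

```python
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation


@dataclass(frozen=True)
class CaseSummary:
    """Hypothetical record for one case; field names are assumptions."""
    county: str
    case_number: str
    amount_owed: Decimal
    source: str  # "OSCN" or "ODCR"

    def __post_init__(self):
        # Reject records that would break downstream report formatting.
        if self.source not in {"OSCN", "ODCR"}:
            raise ValueError(f"unknown source: {self.source!r}")
        if not self.county.strip() or not self.case_number.strip():
            raise ValueError("county and case_number are required")
        if not self.amount_owed.is_finite() or self.amount_owed < 0:
            raise ValueError("amount_owed must be a non-negative number")


def parse_case(raw: dict) -> CaseSummary:
    """Coerce loosely structured output into the strict schema, or raise."""
    try:
        text = str(raw["amount_owed"]).replace("$", "").replace(",", "")
        amount = Decimal(text)
    except (KeyError, InvalidOperation) as exc:
        raise ValueError(f"bad amount_owed in {raw!r}") from exc
    return CaseSummary(
        county=str(raw.get("county", "")),
        case_number=str(raw.get("case_number", "")),
        amount_owed=amount,
        source=str(raw.get("source", "")),
    )
```

A record either parses into the schema or fails loudly, so a malformed amount such as `"n/a"` never reaches a report as a silent zero.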

The current pilot offers a progress view for long-running queries, download of generated reports, and session-scoped deletion, with ongoing work to harden identity, storage, and monitoring.

The core workflow is:

- staff enter basic client details;

- the tool scans OSCN/ODCR court databases to enumerate counties and case numbers potentially relevant to this client;

- staff can select cases for detail;

- the tool compiles per-case summaries (offense/charge, disposition, total amounts owed), highlights non-court obligations when detectable, and pulls itemized payment history where available.

- It also flags potential problems (e.g., warrants or applications to accelerate) that could affect suitability for a waiver docket.

- The tool creates the output report. This report follows LASO’s existing work product structure so it can slot into attorney review and hearing preparation.
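The compile-and-report steps in the workflow above can be sketched roughly as follows. This is an illustrative sketch under stated assumptions, not the tool's implementation: `CaseRecord`, `build_report`, and all field names are hypothetical, and the only red flag modeled here is an active warrant.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class CaseRecord:
    """Hypothetical per-case record; all field names are assumptions."""
    county: str
    case_number: str
    assessed: Decimal
    payments: list[Decimal] = field(default_factory=list)
    has_warrant: bool = False

    @property
    def balance(self) -> Decimal:
        # Balance is the assessed amount minus every recorded payment.
        return self.assessed - sum(self.payments, Decimal("0"))


def build_report(cases: list[CaseRecord]) -> dict:
    """Group cases by county, total the balances, and collect red flags."""
    by_county: dict[str, list[CaseRecord]] = defaultdict(list)
    for case in cases:
        by_county[case.county].append(case)

    report = {"counties": {}, "red_flags": [], "total_balance": Decimal("0")}
    for county in sorted(by_county):
        county_cases = by_county[county]
        county_total = sum((c.balance for c in county_cases), Decimal("0"))
        report["counties"][county] = {
            "cases": [c.case_number for c in county_cases],
            "balance": county_total,
        }
        report["total_balance"] += county_total
        report["red_flags"] += [
            f"{c.case_number}: active warrant" for c in county_cases if c.has_warrant
        ]
    return report
```

Amounts are kept as `Decimal` rather than `float` so that per-county and statewide totals add up to the cent.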

Data realities and access constraints are part of the design: OSCN and ODCR differ in coverage and in which payment fields are visible to the public; ODCR often surfaces itemized totals more readily for KellPro counties, while OSCN imports can lack summary fields. Some payment details appear only in e-payment screens or PDFs, and public-defender credentials may expose additional fields that LASO cannot see. The team is pursuing appropriate whitelisting, working practices that respect site terms, and a verification flow that “shows its work” so advocates can validate any figure before relying on it in court.
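One way to picture a verification flow that “shows its work” is to attach provenance to every figure and to mark gaps explicitly instead of guessing. The sketch below assumes a hypothetical `SourcedFigure` structure and a free-text `locator` field; neither comes from the project itself.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class SourcedFigure:
    """A dollar amount plus where it came from (hypothetical structure)."""
    label: str
    amount: Optional[Decimal]  # None when the source did not expose a value
    source: str                # e.g. "OSCN" or "ODCR"
    locator: str               # hypothetical pointer: docket entry, PDF page, etc.


def render_for_review(figures: list[SourcedFigure]) -> list[str]:
    """Render each figure with its provenance; mark gaps instead of guessing."""
    lines = []
    for fig in figures:
        if fig.amount is None:
            lines.append(
                f"{fig.label}: NOT FOUND in {fig.source} ({fig.locator}) [needs review]"
            )
        else:
            lines.append(
                f"{fig.label}: ${fig.amount:,.2f} per {fig.source} ({fig.locator})"
            )
    return lines
```

Because a missing value is rendered as a visible gap rather than as zero, a reviewer can tell "nothing owed" apart from "field not visible in this source".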

Development has been iterative. An initial v0.1 pilot shipped to collect feedback on reliability, throughput, and formatting. The team then migrated frameworks to improve consistency across cloud providers and added basic observability to measure success/failure modes during each run. LASO’s attorneys supplied real-world test cases and curated client document sets (with redaction plans) to support evaluation loops, while the project chartered a phased plan that moves from workflow mapping and data preparation to pilot integration and formal evaluation.

Outcomes and Measurement

Expected outcomes are pragmatic:

(1) measurable time savings on the “find everything” research step;

(2) more consistent, reviewable reports that reduce rework;

(3) better screening for waiver-appropriate cases; and

(4) a clearer hand-off into downstream drafting (affidavits/motions) and hearing preparation.

The team is also documenting county differences and edge cases to inform statewide scaling and to define evaluation checklists (completeness, accuracy, error handling, and verification) that can be reused by other jurisdictions.

Quality Rubric

Signs of a Good Job:

- Retrieves all court records with relevant fee and payment data from authorized sources.

- Accurately categorizes fees by type and county.

- Includes a complete payment history and notes on current balances.

- Clearly flags any uncertainty or ambiguous data.

- Handles common name errors, aliases, and old formats.

- Produces transparent, annotated reports that follow current legal workflows.

- Offers links to source materials and explains calculations for legal review.

Signs of a Bad Job:

- Misses relevant data or retrieves incorrect records.

- Fails to distinguish between individuals with similar names.

- Incorrectly totals fees or omits historical payments.

- Does not flag missing or ambiguous data.

- Provides incomplete or confusing output that requires significant manual review.
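The name-related items in the rubric (similar names, aliases, name errors) suggest a matching step that prefers "uncertain" over a wrong answer. The following is a minimal sketch of that idea using standard-library string similarity. The `Person` type, the three labels, and the 0.85 threshold are all assumptions chosen for illustration, not values from the project.

```python
from dataclasses import dataclass
from datetime import date
from difflib import SequenceMatcher
from typing import Optional


@dataclass(frozen=True)
class Person:
    name: str
    dob: Optional[date] = None


def normalize(name: str) -> str:
    """Lowercase, drop punctuation, and sort tokens so 'Doe, Jane' == 'Jane Doe'."""
    cleaned = "".join(
        ch if ch.isalnum() or ch.isspace() else " " for ch in name.lower()
    )
    return " ".join(sorted(cleaned.split()))


def classify_match(client: Person, record: Person, threshold: float = 0.85) -> str:
    """Return 'likely', 'uncertain', or 'unlikely' (labels are assumptions)."""
    similarity = SequenceMatcher(
        None, normalize(client.name), normalize(record.name)
    ).ratio()
    if similarity < threshold:
        return "unlikely"
    if client.dob and record.dob:
        # Both dates known: a DOB mismatch outweighs a matching name.
        return "likely" if client.dob == record.dob else "unlikely"
    # Name matches but no DOB to confirm: leave it for human review.
    return "uncertain"
```

A record with a matching name but no date of birth is routed to human review rather than silently included or dropped.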

Status and Next Steps

The Fines & Fees Data Researcher is in the 'sandbox pilot' stage. We are testing it with the legal team to measure the quality and safety of its performance.

To ensure quality and build trust in the tool’s output, the pilot will follow a human-AI team model. Legal staff at LASO will continue to supervise each case, using the tool’s summaries and documentation to verify results and make legal decisions. The agent will provide not only raw data but also detailed annotations, confidence scores, and links back to original records. These features are designed to support expert oversight while reducing the research and analysis burden on staff. Pilot testing will allow the team to evaluate the tool’s reliability, improve its performance on ambiguous or edge cases, and iterate on interface design based on direct attorney and paralegal feedback.

Over the coming months, the team will evaluate the prototype against real case examples, compare results with manually collected data, and refine the rubric for successful performance. A broader goal is to develop a generalizable model for how AI agents can assist in data-intensive legal aid workflows—starting with fines and fees research, but potentially extending to other domains like benefits screening, criminal record expungement, or consumer debt analysis.
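Comparing tool results with manually collected data could be as simple as a per-case diff. The sketch below assumes both sides can be reduced to a mapping from case number to balance; that shape, the function name `compare_to_manual`, and the `agreement_rate` measure are hypothetical and not the project's evaluation method.

```python
from decimal import Decimal


def compare_to_manual(tool: dict[str, Decimal], manual: dict[str, Decimal]) -> dict:
    """Compare balances keyed by case number; both inputs are hypothetical shapes."""
    tool_cases, manual_cases = set(tool), set(manual)
    shared = tool_cases & manual_cases
    mismatched = sorted(c for c in shared if tool[c] != manual[c])
    # Share of manually found cases that the tool found with the same amount.
    agreement = (
        (len(shared) - len(mismatched)) / len(manual_cases) if manual_cases else None
    )
    return {
        "missed_by_tool": sorted(manual_cases - tool_cases),
        "extra_in_tool": sorted(tool_cases - manual_cases),
        "amount_mismatches": mismatched,
        "agreement_rate": agreement,
    }
```

Cases listed under `extra_in_tool` are not necessarily errors: they may be records the manual search missed, or records belonging to a different person with a similar name, so each one still needs human review.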