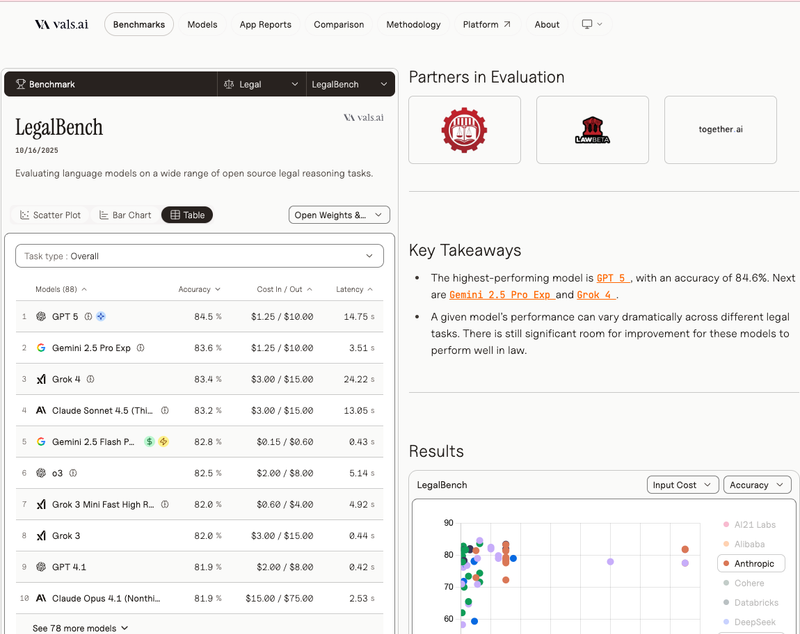

VALS.ai LegalBench Leaderboard

A live, third-party leaderboard tracking how frontier and open models perform on LegalBench tasks—handy for quickly narrowing model choices for legal pilots.

Description

VALS.ai runs models on the LegalBench suite and publishes side-by-side results, with periodic snapshots and commentary. Because performance varies widely by legal task type, the leaderboard helps evaluators pre-screen candidates (e.g., Claude, GPT, Gemini, Grok, Qwen) before deeper, workflow-specific testing.

Treat these scores as directional: use them to build a shortlist, then validate that shortlist on your own benchmarks (actionability, legal fidelity, deadlines, language parity) and against your jurisdiction’s forms and rules. VALS also rolls these results into a broader public benchmarking hub you can reference across projects.
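The pre-screen-then-validate step can be sketched in a few lines of Python. This is a minimal, hypothetical sketch, not a VALS.ai tool or API. It assumes you have copied per-task-type scores from the leaderboard into a dictionary by hand. The `shortlist` function, the model names, the task-type labels, and the numbers are all invented placeholders. It keeps models that clear a minimum score on every task type relevant to your pilot, then ranks them by their weakest one.

```python
"""Hypothetical shortlisting sketch: pre-screen models on per-task-type
leaderboard scores before running your own workflow-specific tests."""

from typing import Dict, List

# model name -> {task-type label -> score in percent}
# Names, labels, and numbers used with this are illustrative placeholders,
# NOT values published by VALS.ai.
Scores = Dict[str, Dict[str, float]]


def shortlist(scores: Scores, task_types: List[str], floor: float, k: int) -> List[str]:
    """Return up to k models whose score on every relevant task type is >= floor,
    ranked by their weakest relevant task type (ties broken by model name)."""
    candidates = []
    for model, per_task in scores.items():
        # A model with no score for a relevant task type cannot be screened; skip it.
        if not all(t in per_task for t in task_types):
            continue
        worst = min(per_task[t] for t in task_types)
        if worst >= floor:
            candidates.append((worst, model))
    # Highest weakest-score first; alphabetical order breaks ties.
    candidates.sort(key=lambda pair: (-pair[0], pair[1]))
    return [model for _, model in candidates[:k]]


if __name__ == "__main__":
    # Invented placeholder scores for illustration only.
    example: Scores = {
        "model-a": {"issue": 82.0, "rule": 74.0, "interpretation": 79.0},
        "model-b": {"issue": 88.0, "rule": 61.0, "interpretation": 85.0},
        "model-c": {"issue": 77.0, "rule": 76.0, "interpretation": 78.0},
    }
    print(shortlist(example, ["issue", "rule", "interpretation"], floor=70.0, k=2))
    # prints ['model-c', 'model-a']
```

Ranking by the weakest relevant task type, rather than by an overall average, is one way to respect the point that performance varies by task type: in the placeholder data, `model-b` has the highest scores on two task types but is dropped because of its weak `rule` score. Whatever survives this filter is still only a shortlist for your own validation.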